Webhooks: What’s the worst that could happen?

Kubernetes Community Days UK

October 17th 2023

A presentation at Kubernetes Community Days UK in October 2023 in London, UK by Marcus Noble

Hi 👋, I’m Marcus Noble, a platform engineer at Giant Swarm

Find me on Mastodon at: @Marcus@k8s.social
All my profiles / contact details at: MarcusNoble.com

6+ years experience running Kubernetes in production environments.

Webhooks in Kubernetes are ✨ POWERFUL ✨
But with that power comes 😱 RISK 😱

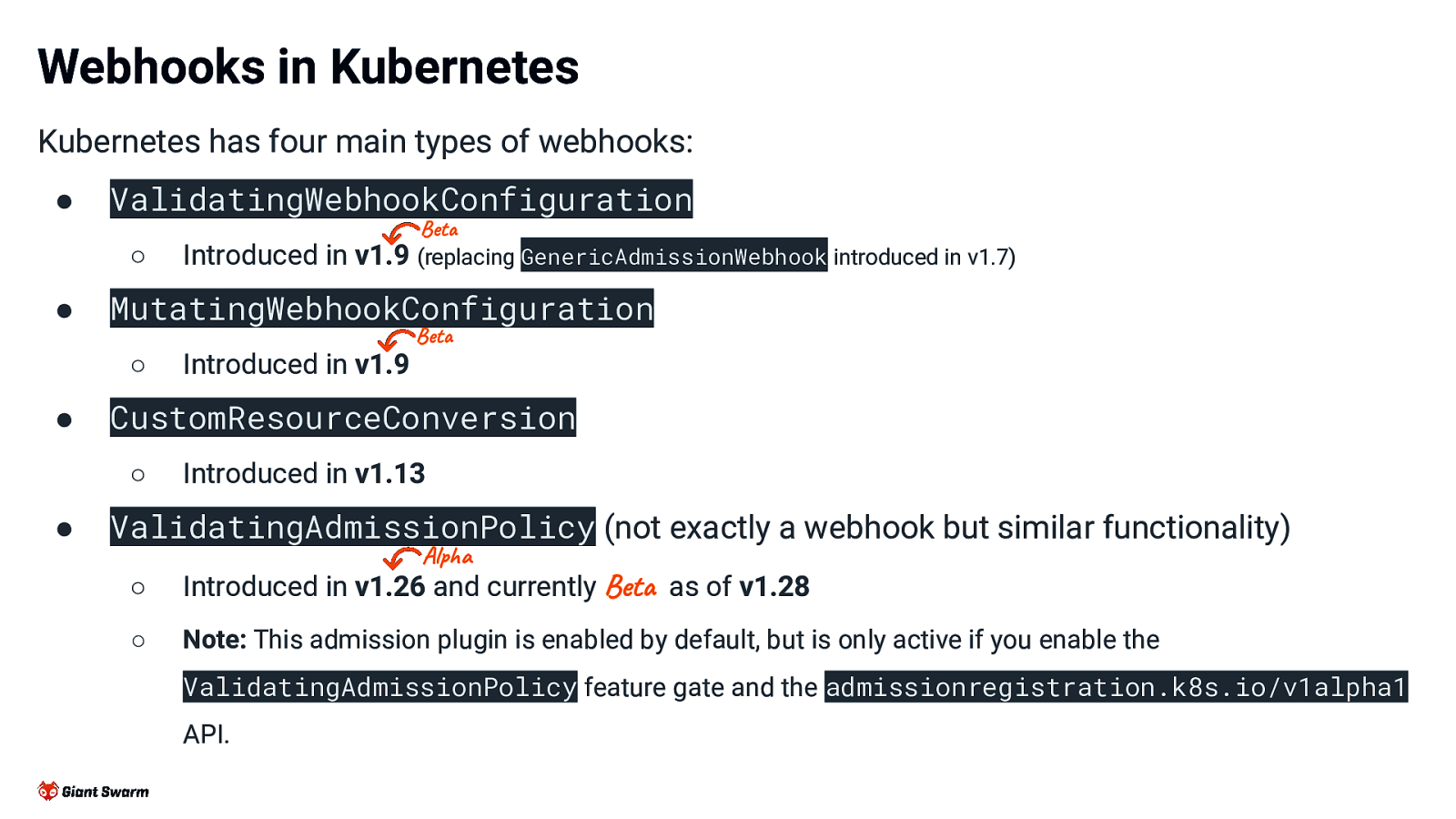

Webhooks in Kubernetes

Kubernetes has four main types of webhooks:

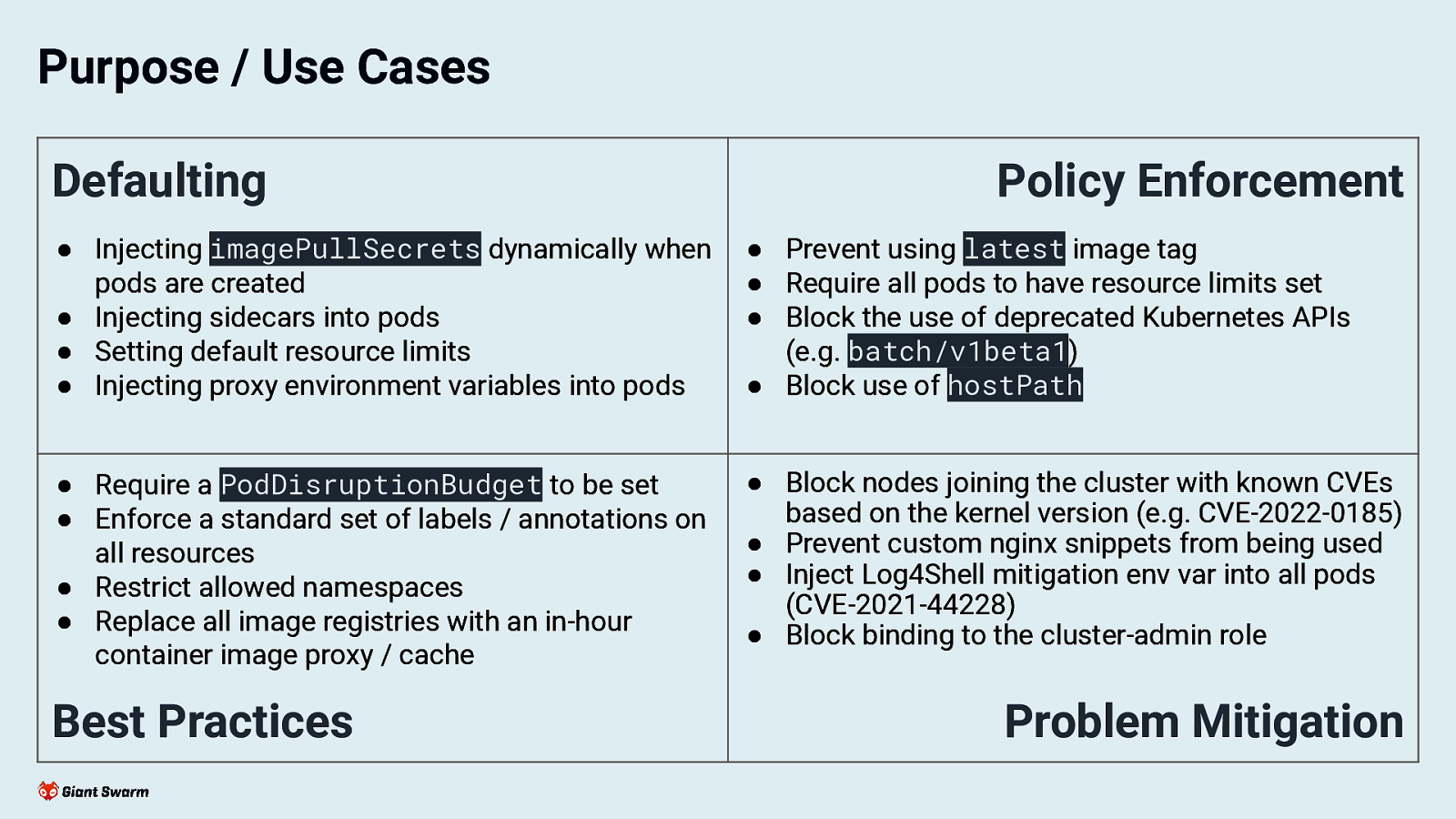

Purpose / Use Cases

Defaulting

Policy Enforcement

Best Practices

Problem Mitigation

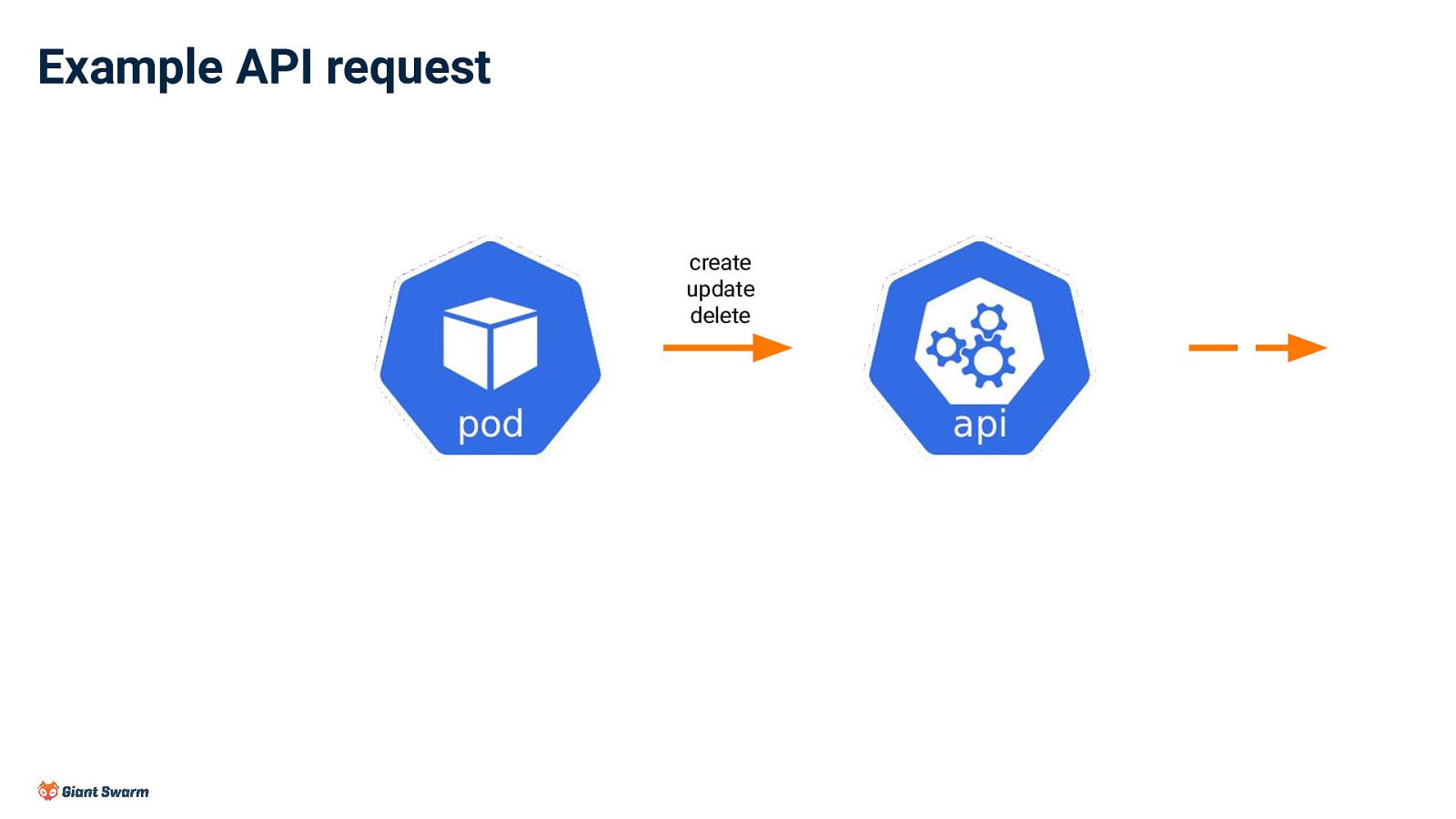

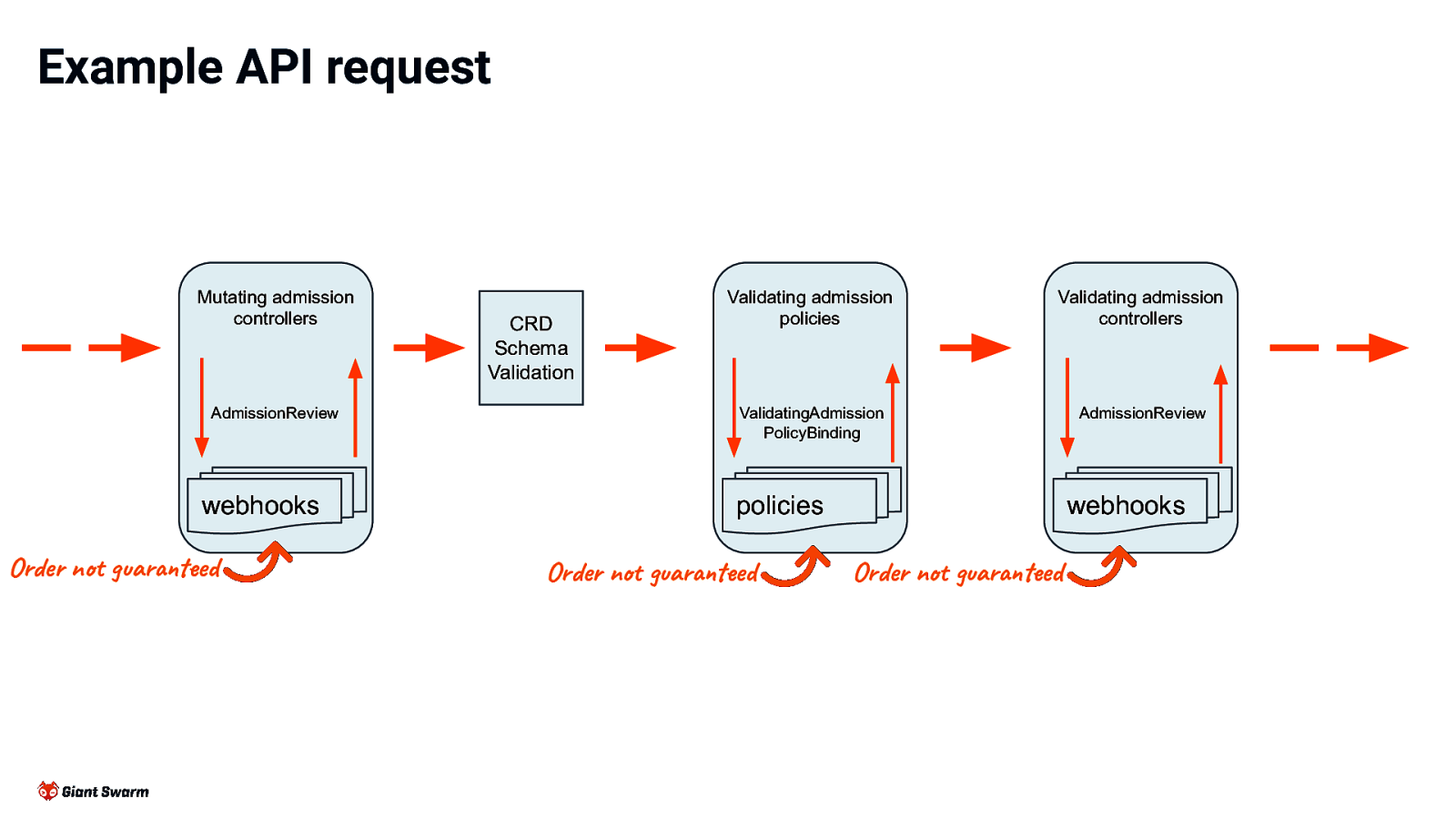

Example API request

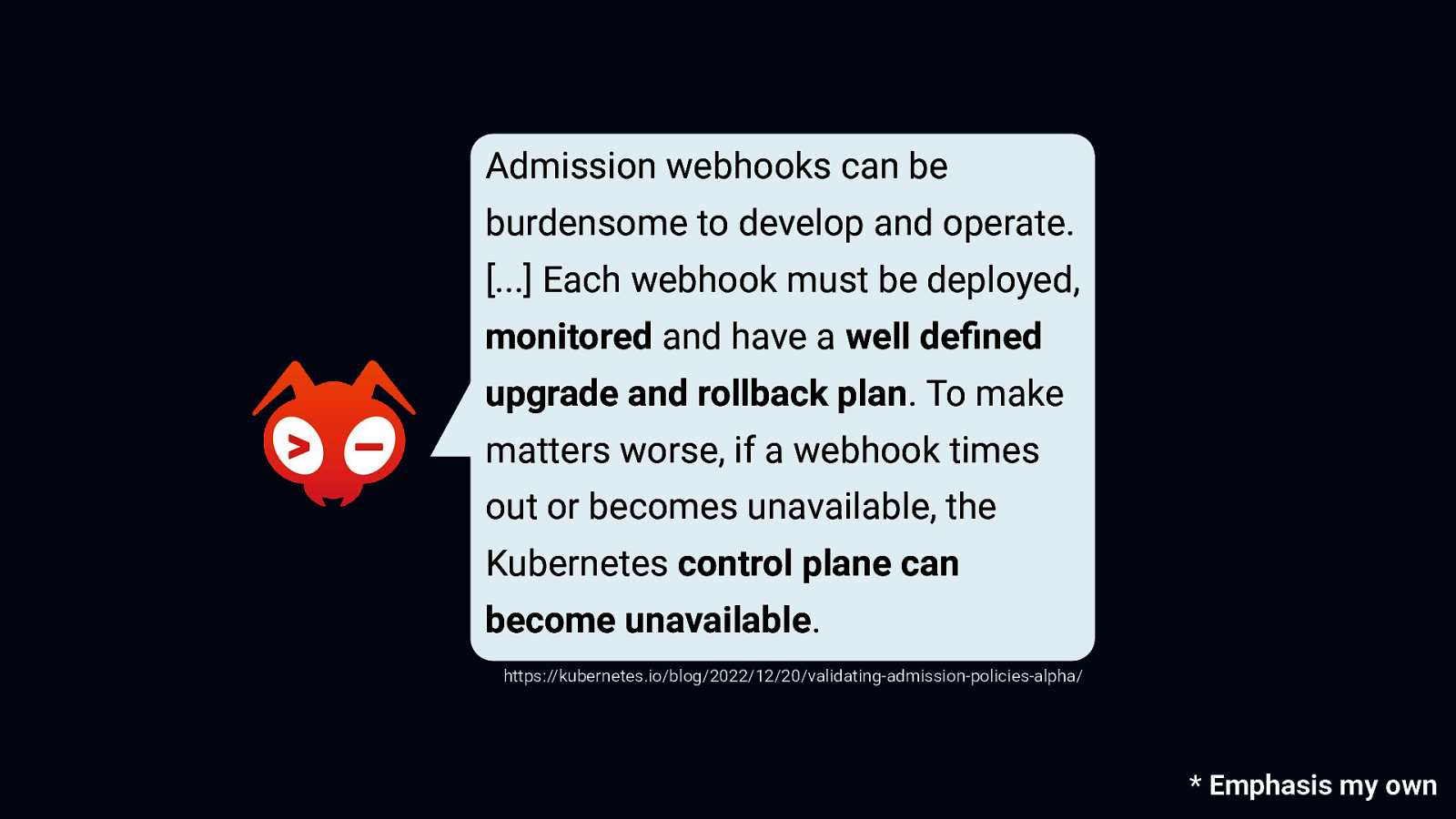

Sounds great, right!? So where’s the risk?

Admission webhooks can be burdensome to develop and operate. […] Each webhook must be deployed, monitored and have a well defined upgrade and rollback plan. To make matters worse, if a webhook times out or becomes unavailable, the Kubernetes control plane can become unavailable.

https://kubernetes.io/blog/2022/12/20/validating-admission-policies-alpha/

The Kubernetes control plane can become unavailable!

Let’s look at some numbers

I took a look at 129 clusters* and found that ALL of them had at least one validating and one mutating webhook in place. On average each cluster had 9 validating and 7 mutating webhooks. The most validating webhooks in a single cluster was 25 and the most mutating webhooks was 15!

How bad can things get?

Let’s play a quick game

I’ll show you scenarios of a misconfigured or malicious webhook and, through a show of hands, I want you all to let me know if you think it’ll cause problems in the cluster. 🙋

Different content type

In this scenario we’re going to return a valid Content-Type header but one that doesn’t match the content being returned.

🙋 I think this will break things
💁 The cluster will handle that just fine

Different content type - ✅

The api-server rejects any webhook responses that aren’t JSON (or YAML) regardless of their Content-Type header. The header value is actually ignored.
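To make the "header is ignored" idea concrete, here is a minimal Go sketch of a client that parses the body as JSON without ever looking at Content-Type. It is not the api-server's code: the `review` type is a hypothetical, heavily trimmed stand-in for an admission response, the helper names are made up for illustration, and the sketch only handles JSON (not YAML).

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// review is a hypothetical, trimmed-down stand-in for a webhook response.
type review struct {
	Response struct {
		Allowed bool `json:"allowed"`
	} `json:"response"`
}

// decodeIgnoringHeader fetches url and parses the body as JSON.
// It never consults the Content-Type header.
func decodeIgnoringHeader(url string) (bool, error) {
	resp, err := http.Get(url)
	if err != nil {
		return false, err
	}
	defer resp.Body.Close()

	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return false, err
	}
	var r review
	if err := json.Unmarshal(body, &r); err != nil {
		return false, fmt.Errorf("body is not valid JSON: %w", err)
	}
	return r.Response.Allowed, nil
}

// tryResponse starts a throwaway server that always replies with the given
// header and body, then runs decodeIgnoringHeader against it.
func tryResponse(contentType, body string) (bool, error) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, _ *http.Request) {
		w.Header().Set("Content-Type", contentType)
		io.WriteString(w, body)
	}))
	defer srv.Close()
	return decodeIgnoringHeader(srv.URL)
}

func main() {
	// A JSON body mislabelled as plain text still parses.
	allowed, err := tryResponse("text/plain", `{"response":{"allowed":true}}`)
	fmt.Println(allowed, err)

	// A non-JSON body labelled as JSON is rejected.
	allowed, err = tryResponse("application/json", "<html>not json</html>")
	fmt.Println(allowed, err)
}
```

The design choice shown is simply to trust the bytes rather than the label, which matches the behaviour observed in this scenario.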

Cut off response

In this scenario we’re going to set the Content-Length response header to be longer than the actual response body.

🙋 I think this will break things
💁 The cluster will handle that just fine

Cut off response - ✅

The api-server returns an error of unexpected EOF.
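The same error can be reproduced with nothing but Go's standard library, which is a rough sketch of why a Go client such as the api-server reports it. The handler below is an illustrative assumption, not the one used in the talk: it declares a 100-byte body and then writes far fewer bytes.

```go
package main

import (
	"errors"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// truncatedHandler declares a 100-byte body but writes only a fragment.
func truncatedHandler(w http.ResponseWriter, _ *http.Request) {
	w.Header().Set("Content-Length", "100")
	io.WriteString(w, `{"response":`)
}

// readTruncated performs a real HTTP round trip against truncatedHandler
// and returns the error seen while reading the response body.
func readTruncated() error {
	srv := httptest.NewServer(http.HandlerFunc(truncatedHandler))
	defer srv.Close()

	resp, err := http.Get(srv.URL)
	if err != nil {
		return err
	}
	defer resp.Body.Close()

	_, err = io.ReadAll(resp.Body)
	return err
}

// isUnexpectedEOF reports whether err is (or wraps) io.ErrUnexpectedEOF.
func isUnexpectedEOF(err error) bool {
	return errors.Is(err, io.ErrUnexpectedEOF)
}

func main() {
	err := readTruncated()
	fmt.Println(err, isUnexpectedEOF(err))
}
```

Go's HTTP client knows how many bytes were promised, so a connection that closes early surfaces as `io.ErrUnexpectedEOF` rather than as a silently short body.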

Redirect

This scenario responds to all webhook requests with a redirect to a service that infinitely redirects the client.

🙋 I think this will break things
💁 The cluster will handle that just fine

Redirect - ✅

The api-server stops following the redirects after 10 redirects.
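The limit of 10 matches the default redirect policy of Go's `net/http` client. The sketch below shows that default in isolation, assuming an unmodified `http.Client`; whether the api-server's webhook client relies on exactly this default is an assumption on my part, and the `/again` path and helper name are purely illustrative.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"sync/atomic"
)

// followLoop points Go's default HTTP client at a server that redirects
// forever. It returns how many requests reached the server before the
// client gave up, along with the client's error.
func followLoop() (int64, error) {
	var hits atomic.Int64
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		hits.Add(1)
		// Always redirect, so the client never reaches a final response.
		http.Redirect(w, r, "/again", http.StatusFound)
	}))
	defer srv.Close()

	resp, err := http.Get(srv.URL)
	if resp != nil {
		resp.Body.Close()
	}
	return hits.Load(), err
}

func main() {
	hits, err := followLoop()
	fmt.Println("requests served:", hits)
	fmt.Println("client error:", err)
}
```

A client that needs a different limit can set `CheckRedirect` on its own `http.Client`; the default simply guarantees that a redirect loop ends in an error instead of running forever.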

Reinvocation

This scenario configures two mutating webhooks with a reinvocationPolicy set to IfNeeded. Both webhooks will mutate the object, causing the other webhook to be triggered again.

🙋 I think this will break things
💁 The cluster will handle that just fine

Reinvocation - ✅

Each webhook is triggered 2 times and then no more. The api-server keeps track of how many times it has called a specific webhook and avoids calling it endlessly.

From the Kubernetes documentation:

> if additional invocations result in further modifications to the object, webhooks are not guaranteed to be invoked again.

“Fork bomb”

In this scenario our webhook handler generates a new Event resource to record that the webhook was triggered. This in turn triggers our webhook against the Event resource, which generates another Event, and so on.

🙋 I think this will break things
💁 The cluster will handle that just fine

“Fork bomb” - 🤷

The cluster will be fine initially, provided the webhook handler doesn’t wait for successful creation of the Event. But a couple of things might happen here:

● The api-server may DoS itself with too many requests if the cluster is active enough.
● In the background the Events in the cluster keep building up, consuming more and more etcd storage and resources. Depending on cluster configuration (see the --event-ttl api-server flag) this could eventually take down etcd and cause a cluster outage.

Data overload

In this scenario we’re going to return as much data as possible in the response to the api-server. To achieve this we’re piping random data from the crypto/rand package to the response writer.

🙋 I think this will break things
💁 The cluster will handle that just fine

Data overload - 🔥

The api-server completely locks up and stops responding to any further API calls.

● Restarting the api-server temporarily fixes the issue until the webhook is next triggered.
● Reducing the timeoutSeconds seems to help the api-server handle the webhook calls, but the default of 10s causes it to lock up.
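A handler like the one described takes only a line of Go. The sketch below pairs it with a client that caps how much it is willing to read, which is one general defence for any Go client reading an untrusted response. It is not a description of what the api-server does; the function names and the 1 MiB cap are assumptions chosen for illustration.

```go
package main

import (
	"crypto/rand"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// floodHandler streams random bytes until the client goes away.
func floodHandler(w http.ResponseWriter, _ *http.Request) {
	io.Copy(w, rand.Reader)
}

// readCapped reads at most limit bytes from the flood and then hangs up.
// It returns the number of bytes actually consumed.
func readCapped(limit int64) (int64, error) {
	srv := httptest.NewServer(http.HandlerFunc(floodHandler))
	// Deferred calls run last-in first-out: the body is closed first,
	// which drops the connection and lets the handler's io.Copy return,
	// so srv.Close does not wait forever.
	defer srv.Close()

	resp, err := http.Get(srv.URL)
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()

	// io.LimitReader stops after limit bytes no matter how much is sent.
	return io.Copy(io.Discard, io.LimitReader(resp.Body, limit))
}

func main() {
	n, err := readCapped(1 << 20) // cap at 1 MiB
	fmt.Println(n, err)
}
```

Without a cap (for example a bare `io.ReadAll`), the client would keep buffering until it hits its own timeout or runs out of memory.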

The Actual Risk

The Actual Risks of Webhooks

While webhooks themselves are rarely the cause of cluster problems, they have a tendency to exacerbate them. As webhooks are often on the critical path, e.g. pod creation, if they fail they can cause new problems to surface or existing problems to become harder to handle!

The Risks of Webhooks

tl;dr: If a webhook isn’t built and configured with resilience in mind, a failing webhook can block the creation of critical pods, such as the api-server, and eventually take down the whole cluster if not caught quickly.

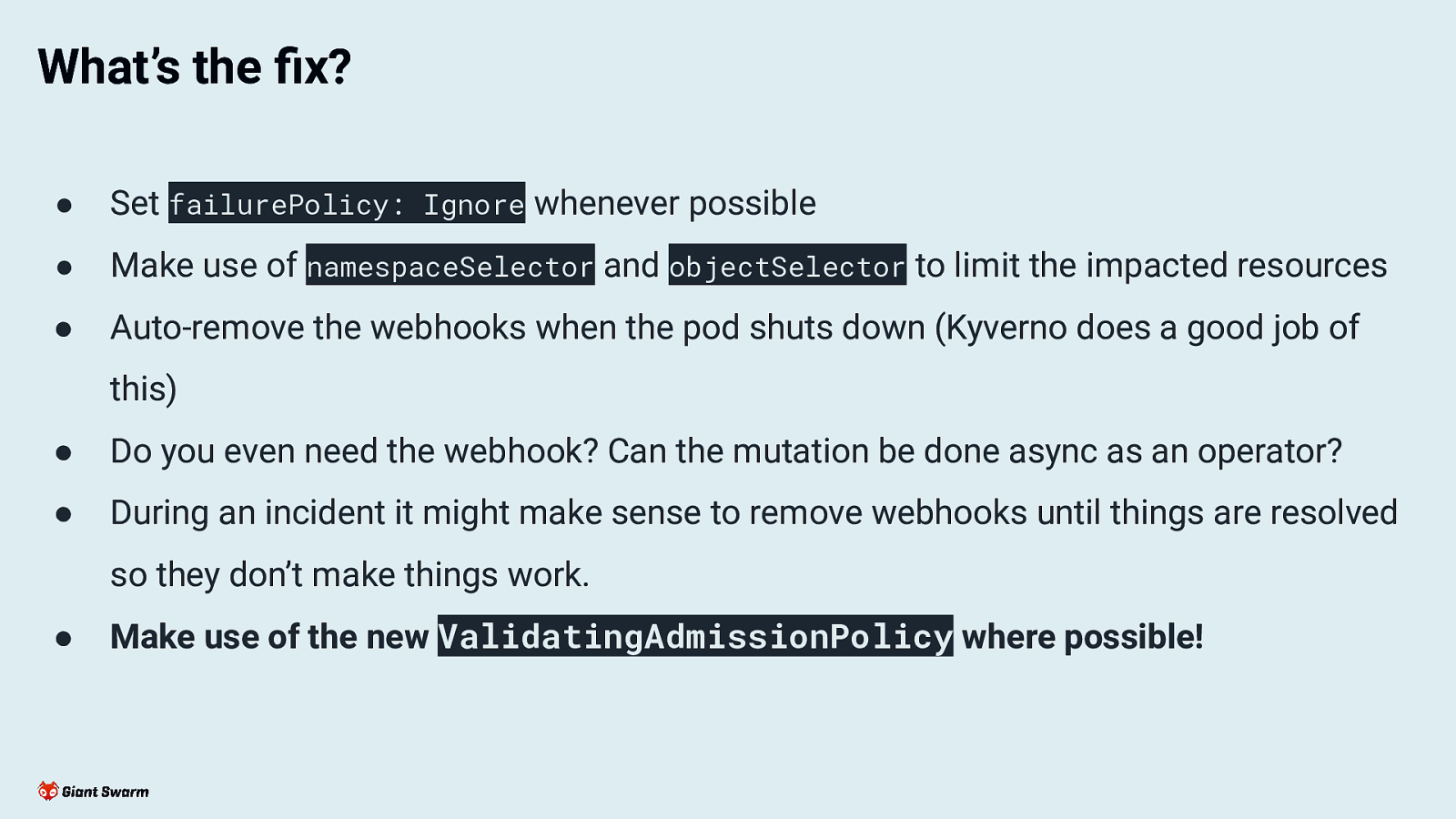

What’s the fix?

● Set failurePolicy: Ignore whenever possible
● Make use of namespaceSelector and objectSelector to limit the impacted resources
● Auto-remove the webhooks when the pod shuts down (Kyverno does a good job of this)
● Do you even need the webhook? Can the mutation be done async as an operator?
● During an incident it might make sense to remove webhooks until things are resolved so they don’t make things worse.
● Make use of the new ValidatingAdmissionPolicy where possible!
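To illustrate why the failure policy matters so much, here is a small Go model of the decision made when a webhook call errors or times out. It is a sketch of the semantics only, not api-server code: `Ignore` and `Fail` are the real policy values, but `admit`, `webhookFunc`, `hungWebhook` and the 50ms timeout are hypothetical names and numbers chosen for the example.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// FailurePolicy mirrors the two values a webhook configuration accepts.
type FailurePolicy string

const (
	Ignore FailurePolicy = "Ignore"
	Fail   FailurePolicy = "Fail"
)

// webhookFunc is a hypothetical stand-in for calling a webhook.
type webhookFunc func(ctx context.Context) (allowed bool, err error)

// admit models the outcome of a request: the webhook's own verdict when
// the call succeeds, and the failure policy's verdict when it does not.
func admit(call webhookFunc, policy FailurePolicy, timeout time.Duration) (bool, error) {
	ctx, cancel := context.WithTimeout(context.Background(), timeout)
	defer cancel()

	allowed, err := call(ctx)
	if err == nil {
		return allowed, nil
	}
	if policy == Ignore {
		// Fail open: the broken webhook is skipped and the request proceeds.
		return true, nil
	}
	// Fail closed: the request is rejected because the webhook is broken.
	return false, fmt.Errorf("webhook call failed: %w", err)
}

// hungWebhook never answers; it returns only when the deadline expires.
func hungWebhook(ctx context.Context) (bool, error) {
	<-ctx.Done()
	return false, ctx.Err()
}

// demo runs the hung webhook under the given policy with a short timeout.
func demo(policy FailurePolicy) (bool, error) {
	return admit(hungWebhook, policy, 50*time.Millisecond)
}

func main() {
	fmt.Println(demo(Ignore))
	fmt.Println(demo(Fail))
}
```

With `Fail`, every matching request is blocked for as long as the webhook is down, which is how a webhook on the pod-creation path can stop critical pods from being recreated.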

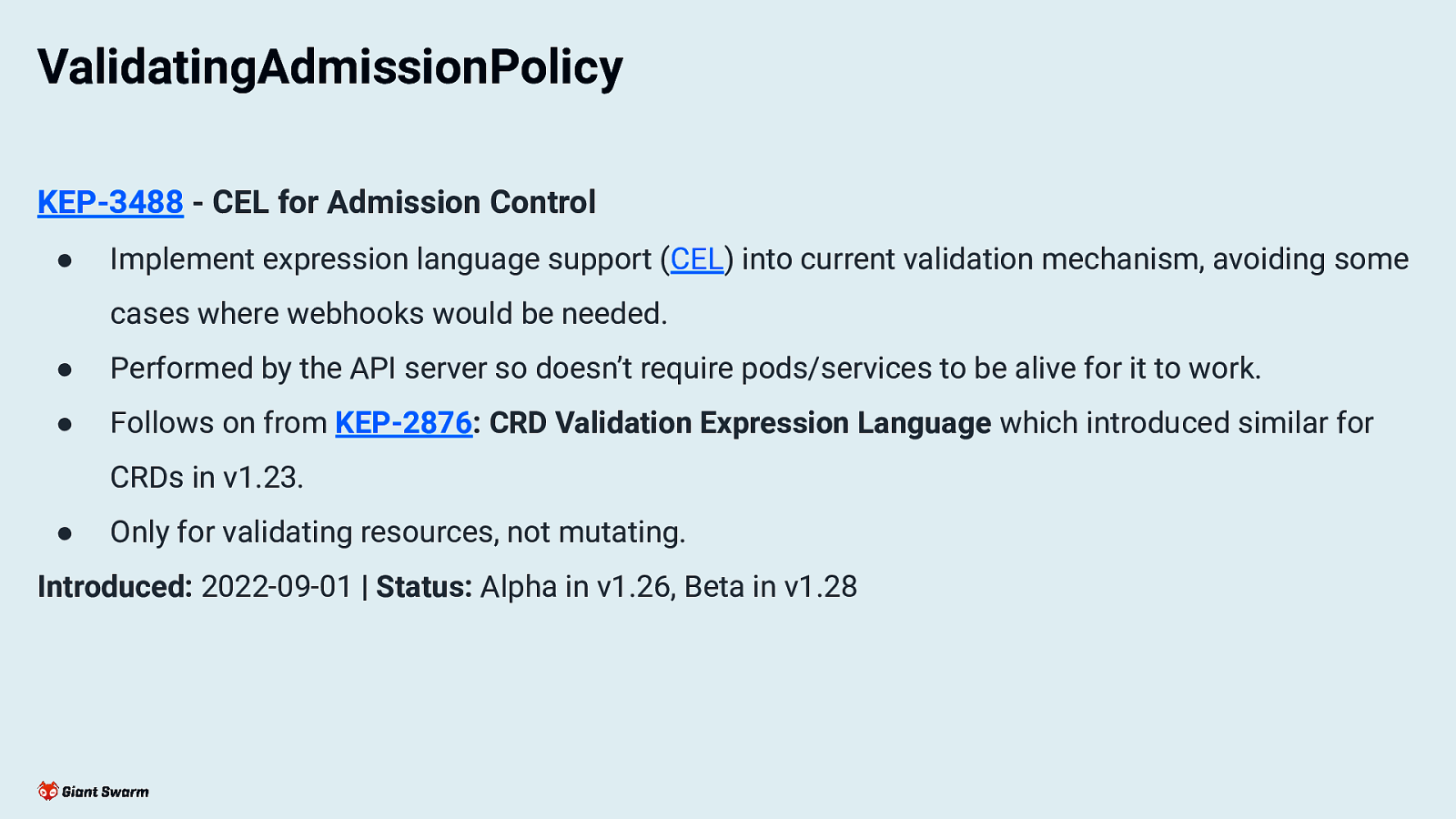

ValidatingAdmissionPolicy

KEP-3488 - CEL for Admission Control

● Implements expression language (CEL) support in the current validation mechanism, avoiding some cases where webhooks would be needed.
● Performed by the API server, so it doesn’t require pods/services to be alive for it to work.
● Follows on from KEP-2876: CRD Validation Expression Language, which introduced something similar for CRDs in v1.23.
● Only for validating resources, not mutating.

Introduced: 2022-09-01 | Status: Alpha in v1.26, Beta in v1.28

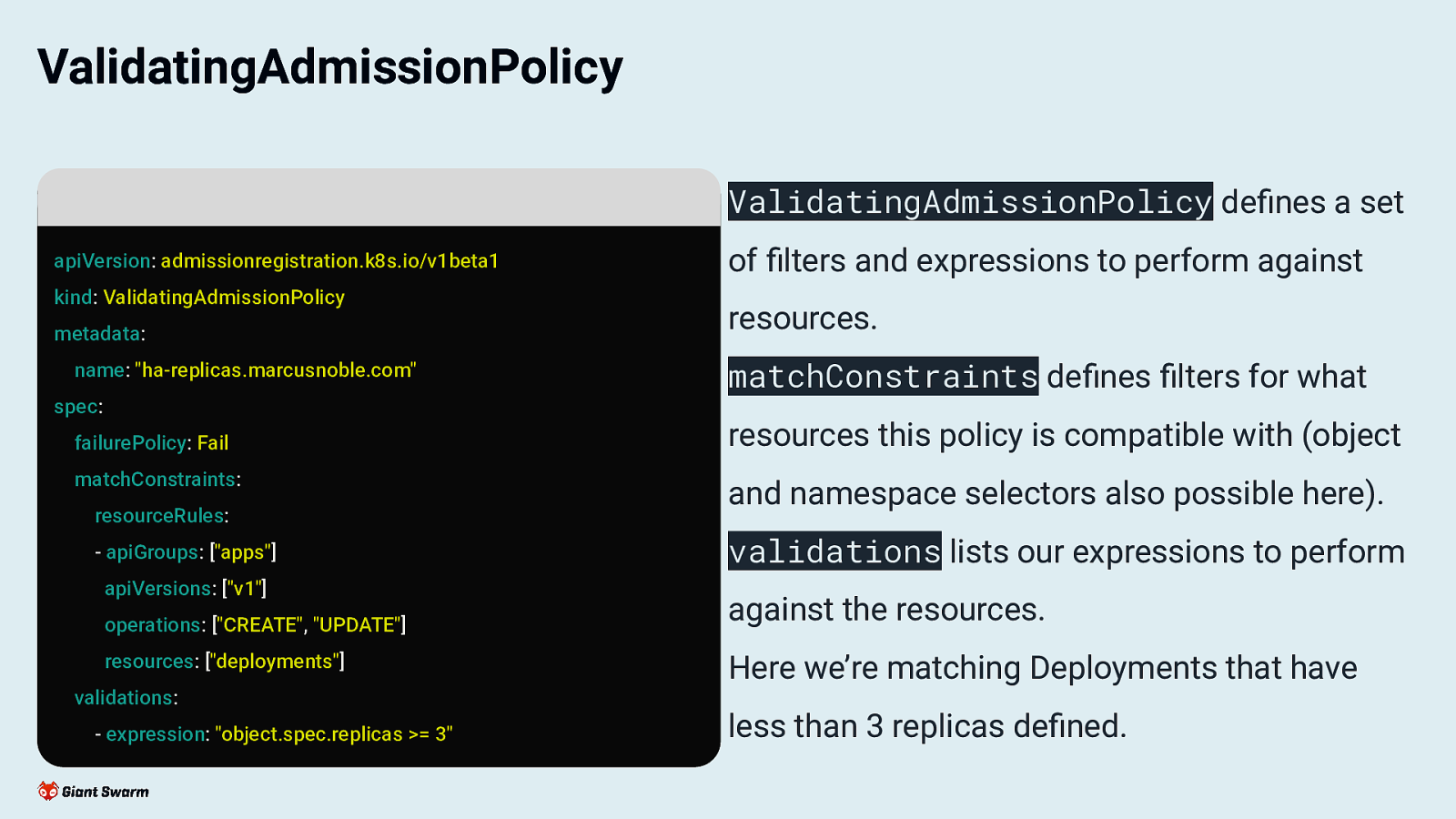

ValidatingAdmissionPolicy defines a set of filters and expressions to perform against resources.

matchConstraints defines filters for which resources this policy is compatible with (object and namespace selectors are also possible here).

validations lists the expressions to evaluate against the resources. Here we’re matching Deployments that have fewer than 3 replicas defined.

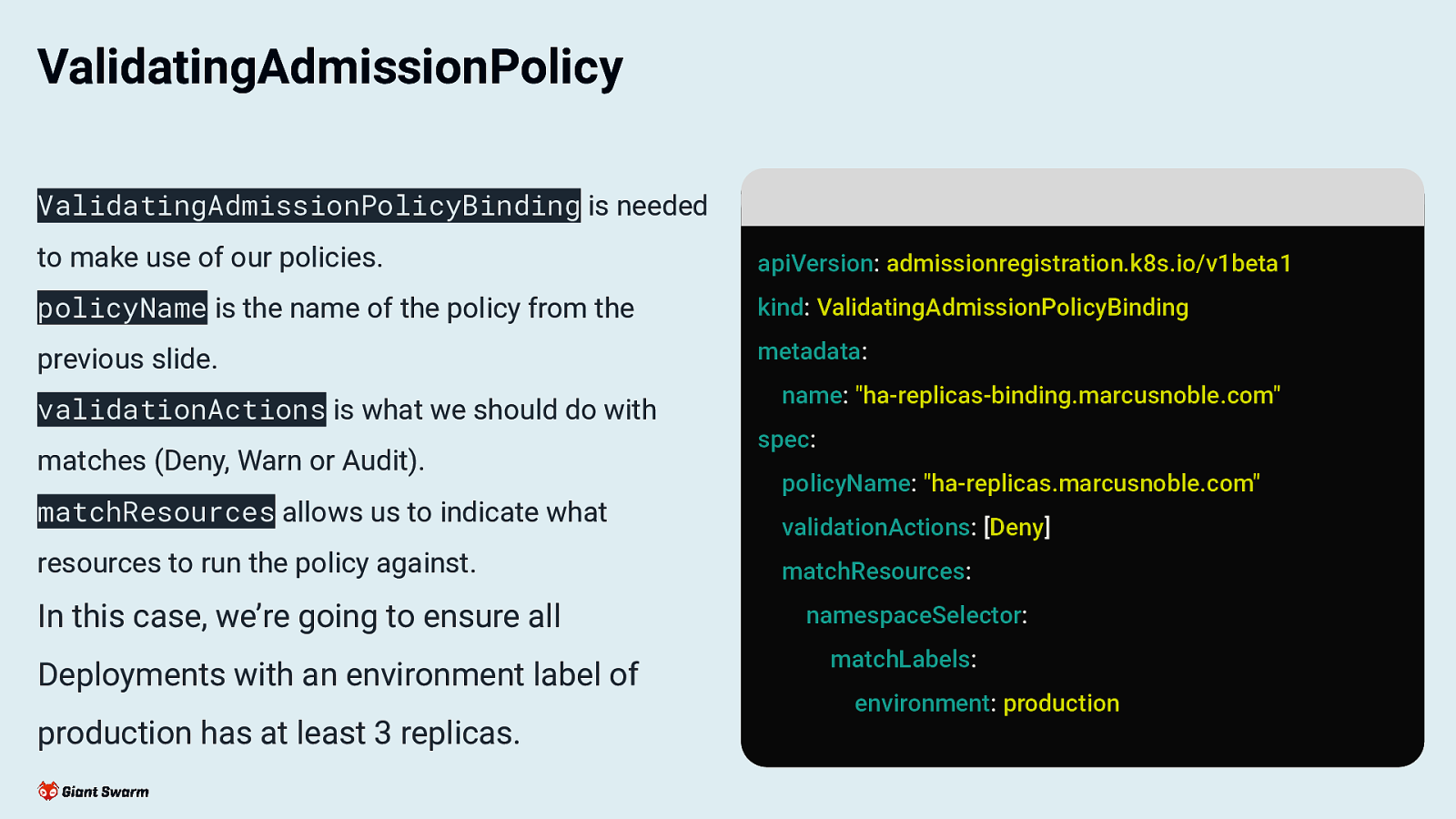

ValidatingAdmissionPolicyBinding is needed to make use of our policies.

policyName is the name of the policy from the previous slide.

validationActions is what we should do with matches (Deny, Warn or Audit).

matchResources allows us to indicate which resources to run the policy against. In this case, we’re going to ensure all Deployments in namespaces with an environment label of production have at least 3 replicas.

    apiVersion: admissionregistration.k8s.io/v1beta1
    kind: ValidatingAdmissionPolicyBinding
    metadata:
      name: "ha-replicas-binding.marcusnoble.com"
    spec:
      policyName: "ha-replicas.marcusnoble.com"
      validationActions: [Deny]
      matchResources:
        namespaceSelector:
          matchLabels:
            environment: production

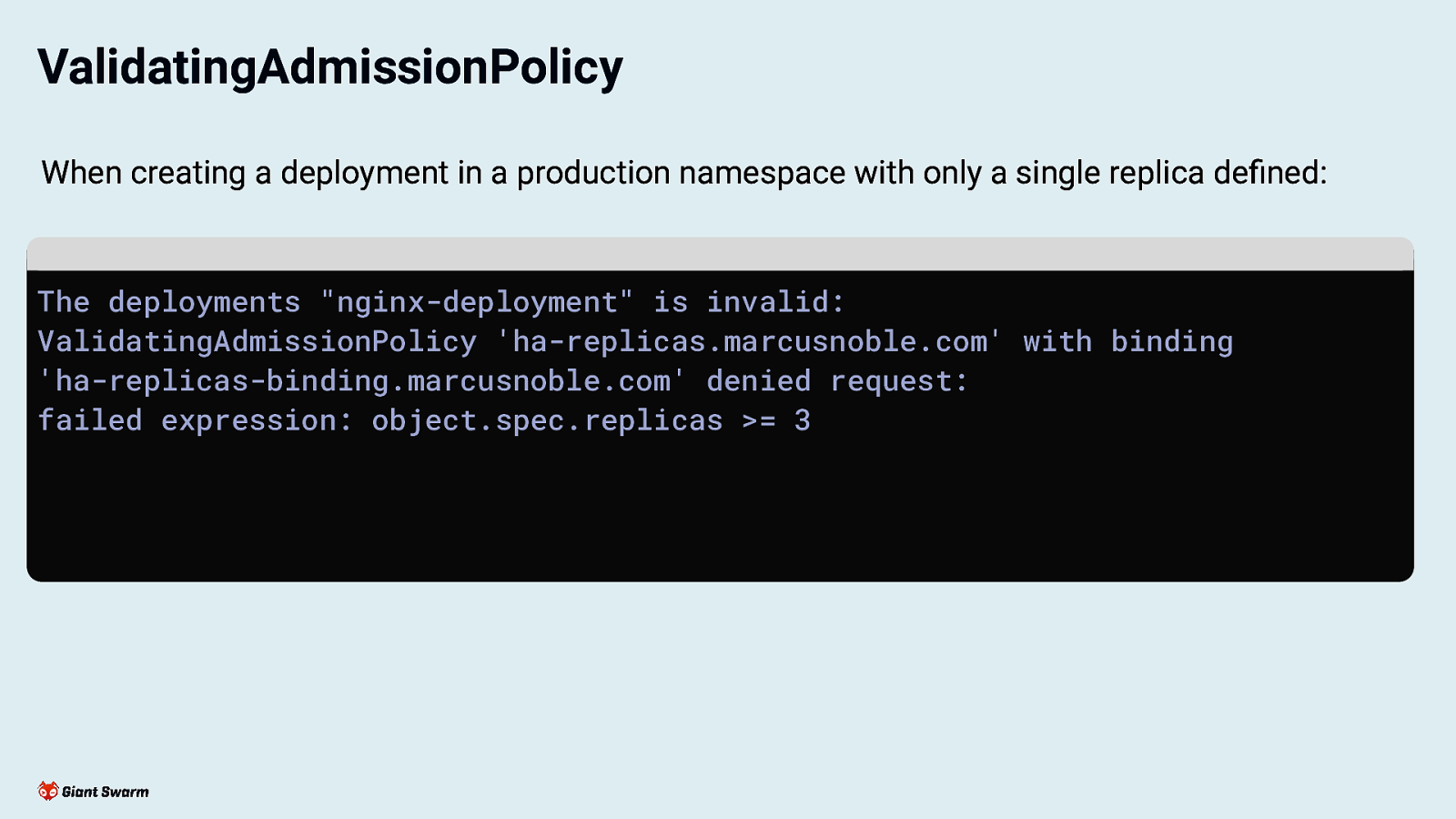

When creating a deployment in a production namespace with only a single replica defined:

    The deployments "nginx-deployment" is invalid: ValidatingAdmissionPolicy 'ha-replicas.marcusnoble.com' with binding 'ha-replicas-binding.marcusnoble.com' denied request: failed expression: object.spec.replicas >= 3

More features:

● Parameters
● Warning message expressions
● Variables
● Match Conditions
● Auditing

https://kubernetes.io/docs/reference/access-authn-authz/validating-admission-policy/

Summary

● Webhooks are rarely the cause of incidents but do have a tendency to make them worse
● Beware the critical path of API requests
● Ensure your webhooks are built to be resilient and highly available
● Where possible, move to using ValidatingAdmissionPolicy instead