Managing Kubernetes without losing your cool

Giant Swarm Webinar, May 3rd 2022

Slide 1

Slide 2

Hi, I’m Marcus Noble, a platform engineer at Giant Swarm.

I’m found around the web as AverageMarcus in most places and @Marcus_Noble_ on Twitter.

~5 years’ experience running Kubernetes in production environments.

Slide 3

My 10 tips for working with Kubernetes

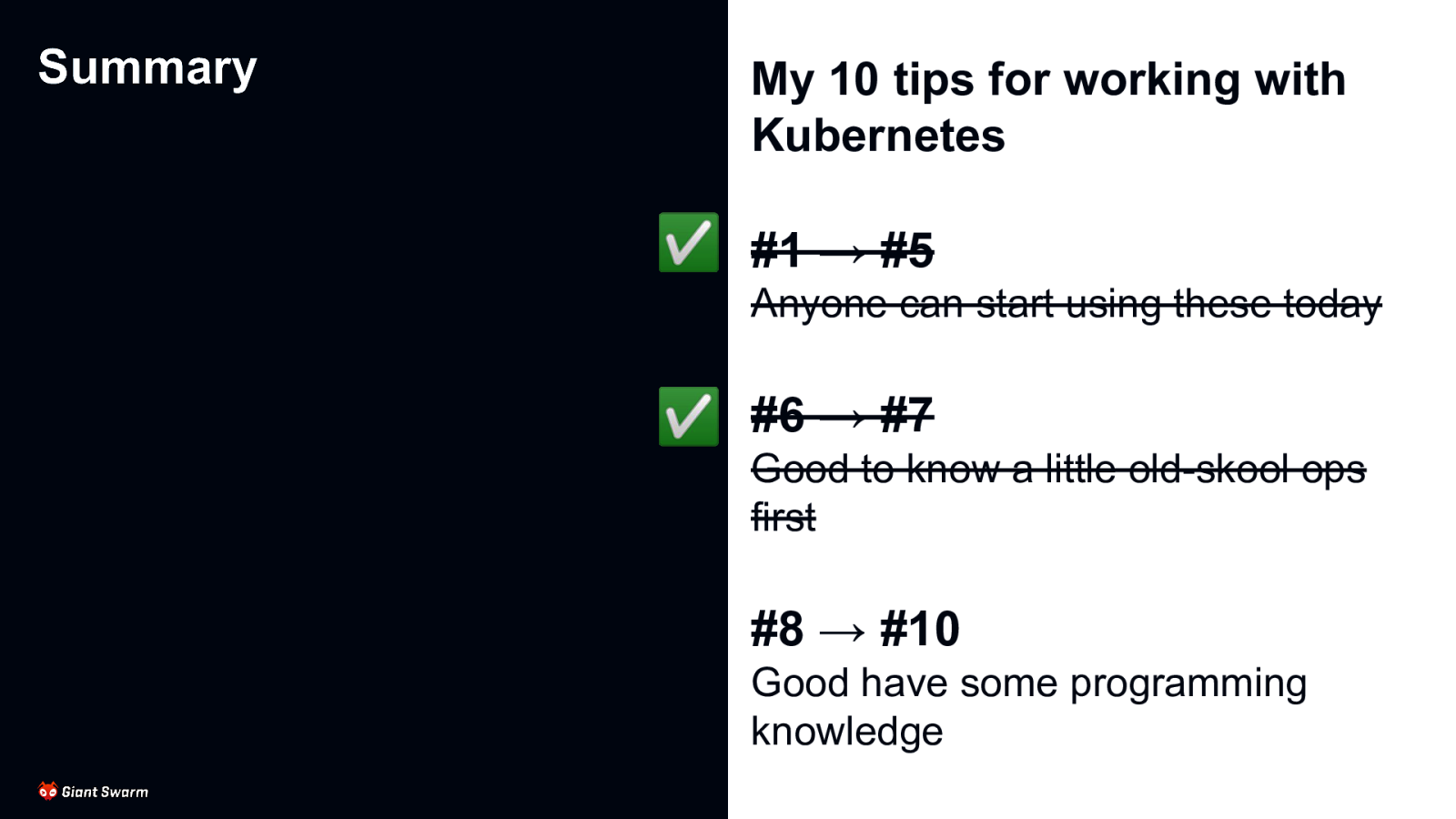

#1 → #5 Anyone can start using these today

#6 → #7 Good to know a little old-skool ops first

#8 → #10 Good to have some programming knowledge

Slide 4

#0 - Pay someone else to deal with it

OK, this one is kinda tongue in cheek but worth mentioning. If you have dozens or hundreds of clusters on top of other development work, you’re going to be stretched thin. Getting someone else to manage things while you focus on what makes your business money can often be the right choice.

Slide 5

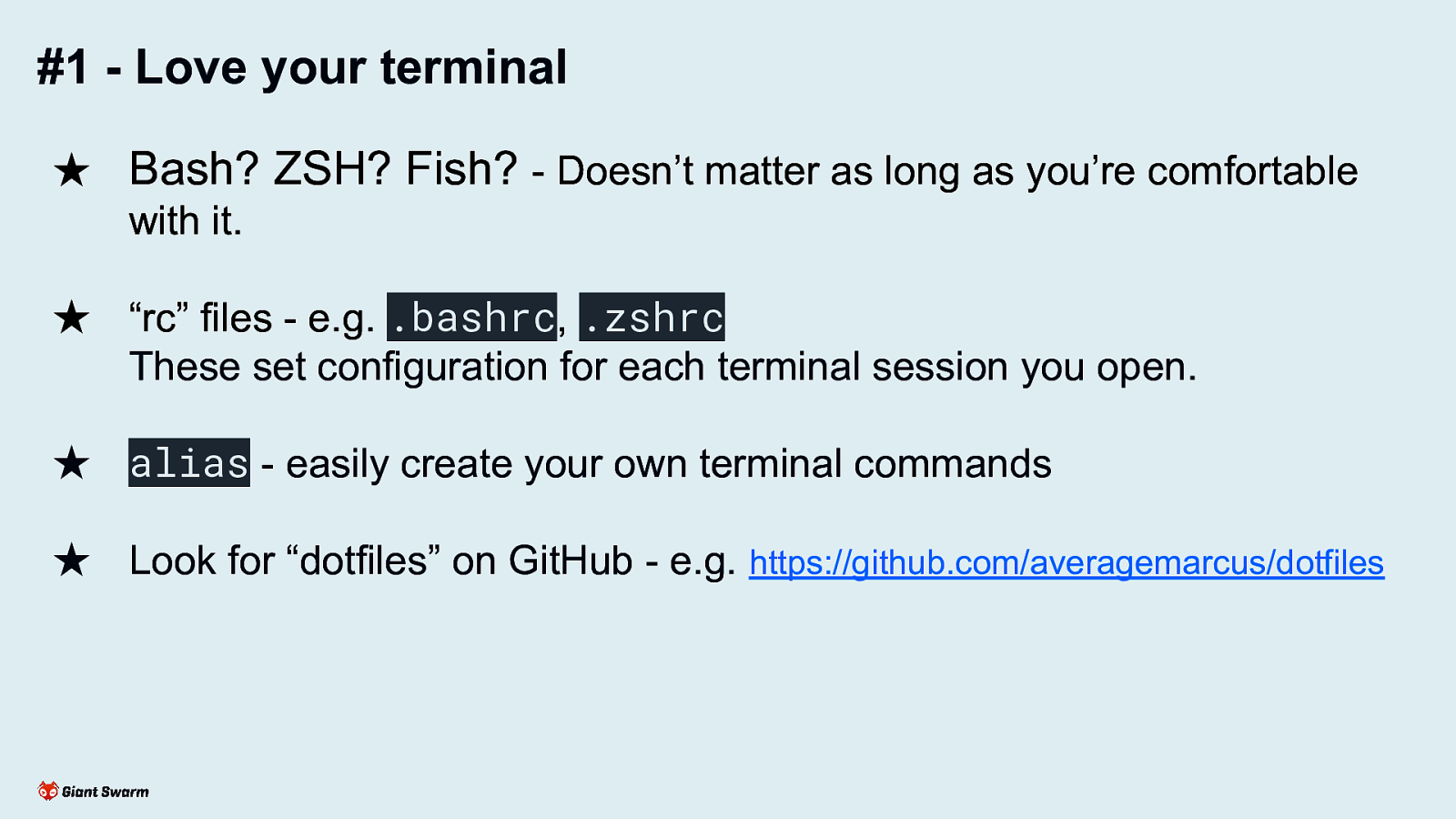

#1 - Love your terminal

Slide 6

#1 - Love your terminal

★ Bash? ZSH? Fish? - Doesn’t matter as long as you’re comfortable with it.

★ “rc” files - e.g. .bashrc, .zshrc. These set configuration for each terminal session you open.

★ alias - easily create your own terminal commands

★ Look for “dotfiles” on GitHub - e.g. https://github.com/averagemarcus/dotfiles

Create your own workflow for tasks you perform often. Avoid typos and “fat fingering” by replacing long, complex commands with short aliases (bonus points for adding help text to remind you later).
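A minimal sketch of what this could look like in a .bashrc or .zshrc. The helper names (kpods, khelp) are made up for illustration and are not from the talk. Shell functions are used instead of plain aliases so that they can take arguments.

```shell
# Sketch for ~/.bashrc or ~/.zshrc. The names kpods and khelp are
# illustrative assumptions, not helpers from the talk.

# kpods [NAMESPACE] - list pods in a namespace (defaults to "default")
kpods() {
  kubectl get pods --namespace "${1:-default}"
}

# khelp - print a reminder of the helpers defined above
khelp() {
  echo "kpods [NAMESPACE]  list pods in a namespace (default: default)"
  echo "khelp              show this help text"
}
```

Running khelp in a fresh terminal is then enough to recall what each shortcut does.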

Slide 7

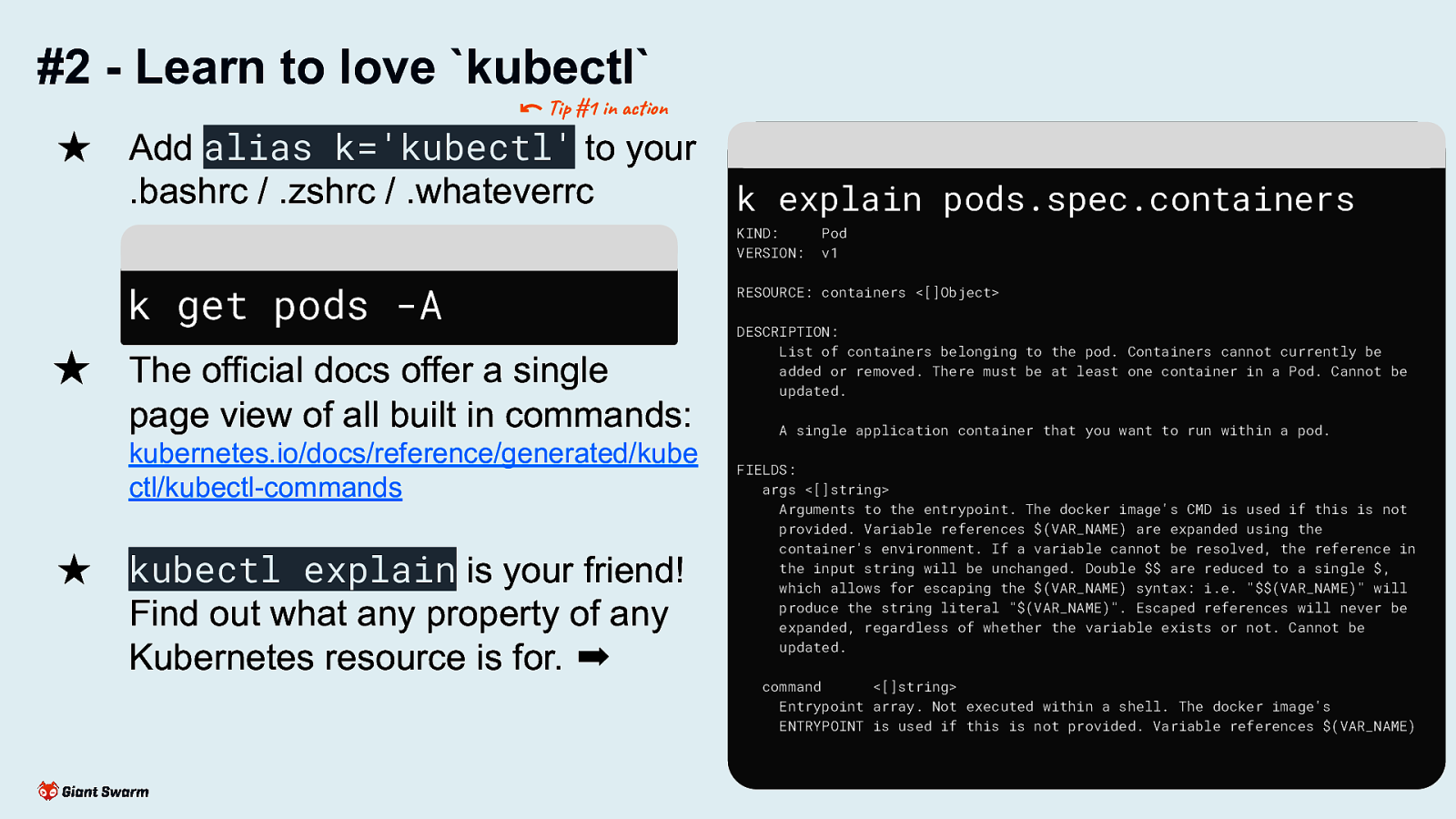

#2 - Learn to love kubectl

Slide 8

#2 - Learn to love kubectl

★ Add alias k='kubectl' to your .bashrc / .zshrc / .whateverrc

★ The official docs offer a single page view of all built-in commands: kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

★ kubectl explain is your friend! Find out what any property of any Kubernetes resource is for.

Save time by only typing k. Use kubectl explain to dig into resources and their properties (useful when you can’t access the official docs, or when you know exactly what you’re looking for).

Slide 9

#3 - Multiple kubeconfigs

Slide 10

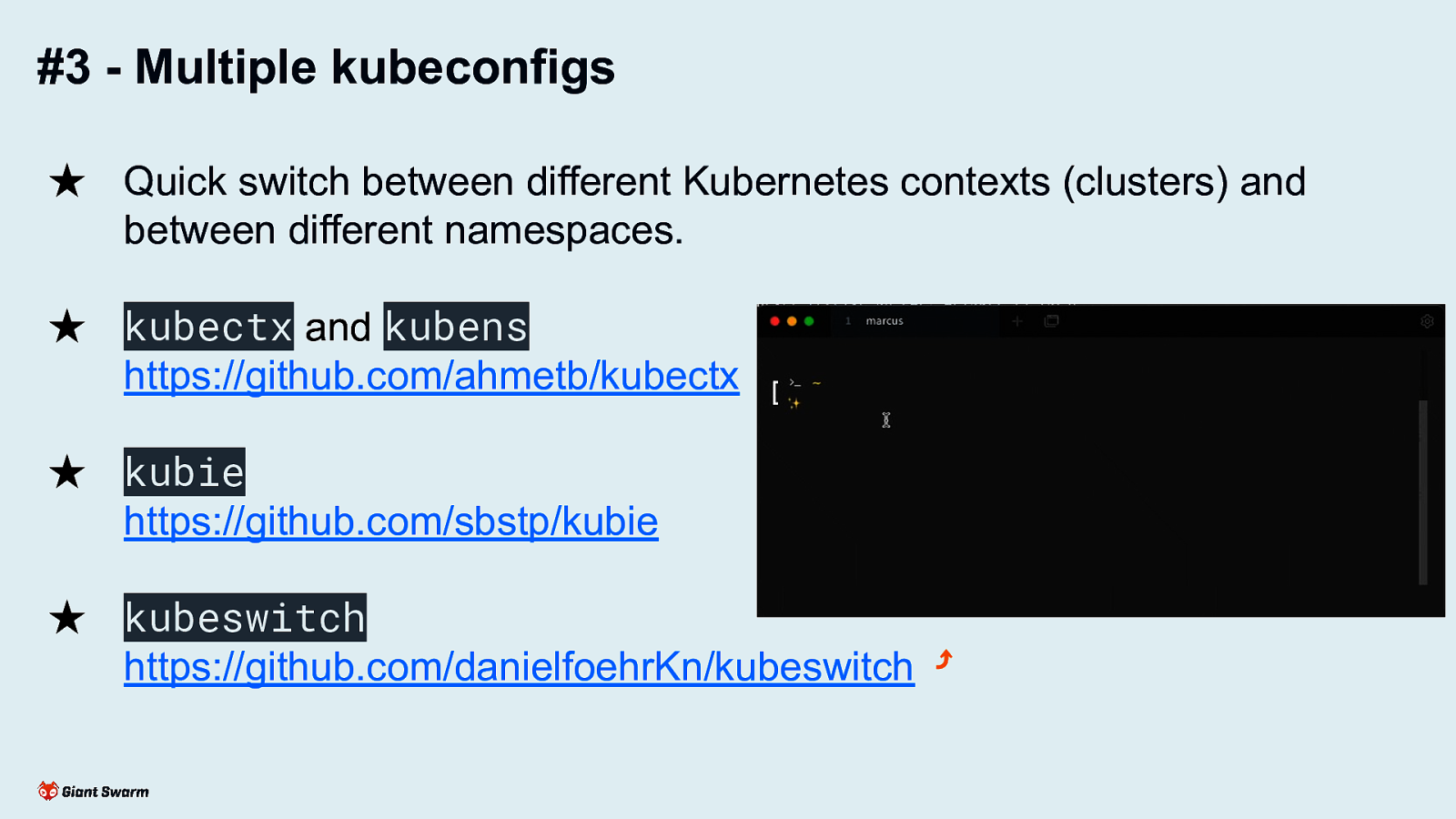

#3 - Multiple kubeconfigs

★ Quick switch between different Kubernetes contexts (clusters) and between different namespaces.

★ kubectx and kubens https://github.com/ahmetb/kubectx

★ kubie https://github.com/sbstp/kubie

★ kubeswitch https://github.com/danielfoehrKn/kubeswitch

kubeswitch is my fave as it supports a directory of kubeconfigs, which makes organising easier.

Slide 11

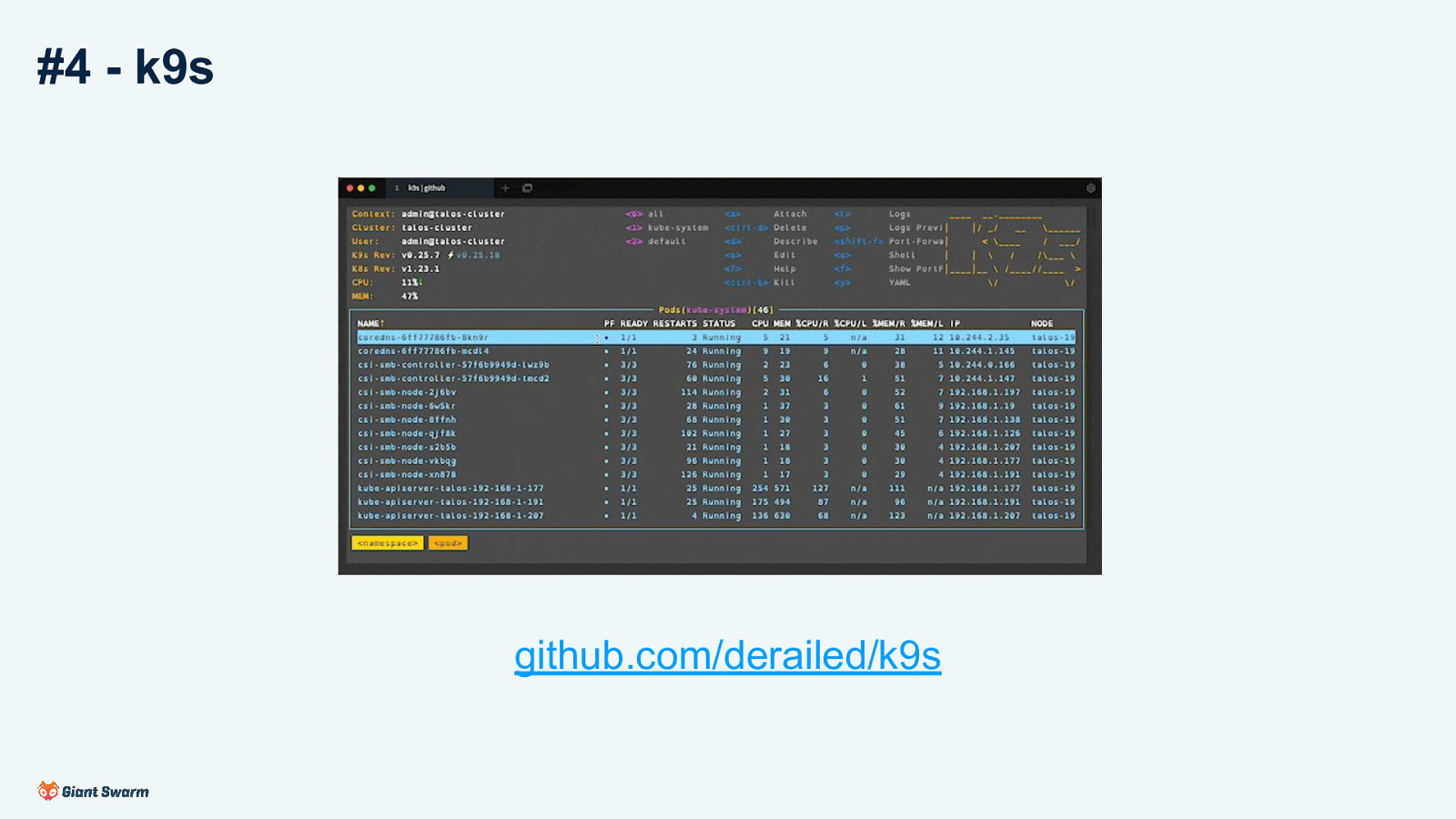

#4 - k9s

Slide 12

#4 - k9s

github.com/derailed/k9s

An interactive terminal UI. Supports all resource types and actions, with lots of keybindings and similar shortcuts to quickly work with a cluster: find, view, edit, port forward, view logs, delete, etc.

Slide 13

#5 - kubectl plugins

Slide 14

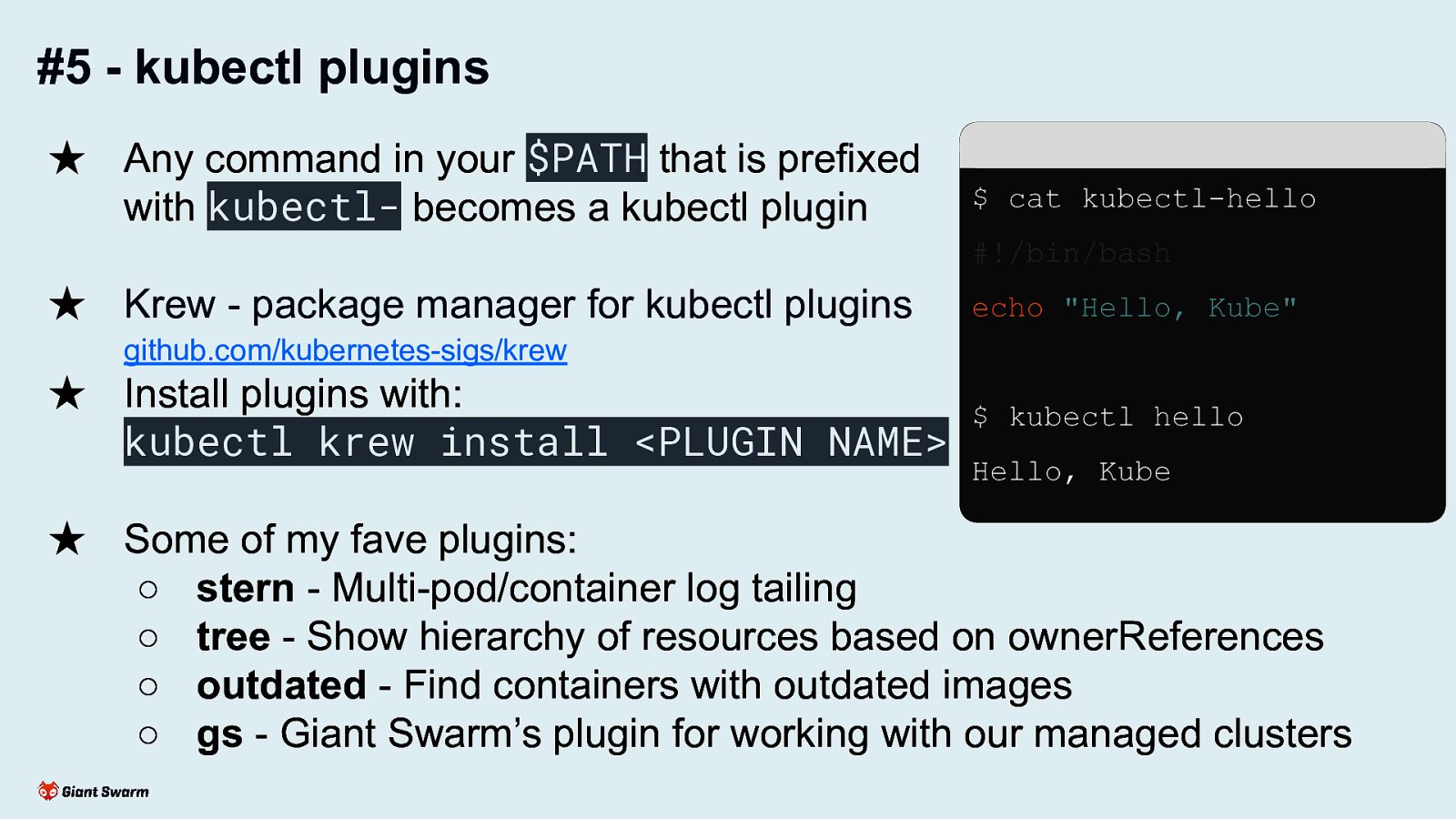

#5 - kubectl plugins

★ Any command in your $PATH that is prefixed with kubectl- becomes a kubectl plugin

★ Krew - package manager for kubectl plugins - github.com/kubernetes-sigs/krew

★ Install plugins with: kubectl krew install <PLUGIN NAME>

★ Some of my fave plugins:

○ stern - Multi-pod/container log tailing

○ tree - Show hierarchy of resources based on ownerReferences

○ outdated - Find containers with outdated images

○ gs - Giant Swarm’s plugin for working with our managed clusters

Plugins can be written in any language. You can easily add your own by creating Bash scripts with a kubectl- prefixed name. Note: autocomplete is a bit trickier here. Some plugins support it, but generally expect your tab completion to only recommend core kubectl features.
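As a rough sketch of the "create your own" route, the helper below writes a tiny Bash plugin to disk. The plugin name (hello), the helper name (install_hello_plugin) and the default directory are all assumptions made for illustration.

```shell
# Sketch: create a minimal kubectl plugin. The plugin name "hello" and the
# default target directory are illustrative assumptions.
install_hello_plugin() {
  local plugin_dir
  plugin_dir="${1:-$HOME/.local/bin}"   # this directory must be on your $PATH
  mkdir -p "$plugin_dir"

  # The file name must start with "kubectl-" for kubectl to discover it
  cat > "$plugin_dir/kubectl-hello" <<'EOF'
#!/usr/bin/env bash
# Invoked by kubectl as: kubectl hello [NAME]
echo "Hello ${1:-world} from a kubectl plugin"
EOF

  chmod +x "$plugin_dir/kubectl-hello"
}
```

After running install_hello_plugin (and with that directory on your $PATH), kubectl hello Marcus should run the script, and kubectl plugin list should include it among the discovered plugins.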

Slide 15

My 10 tips for working with Kubernetes

#1 → #5 Anyone can start using these today ✅

#6 → #7 Good to know a little old-skool ops first

#8 → #10 Good to have some programming knowledge

Not so scary so far, right? Now on to some more hands-on techniques.

Slide 16

#6 - kshell / kubectl debug

Slide 17

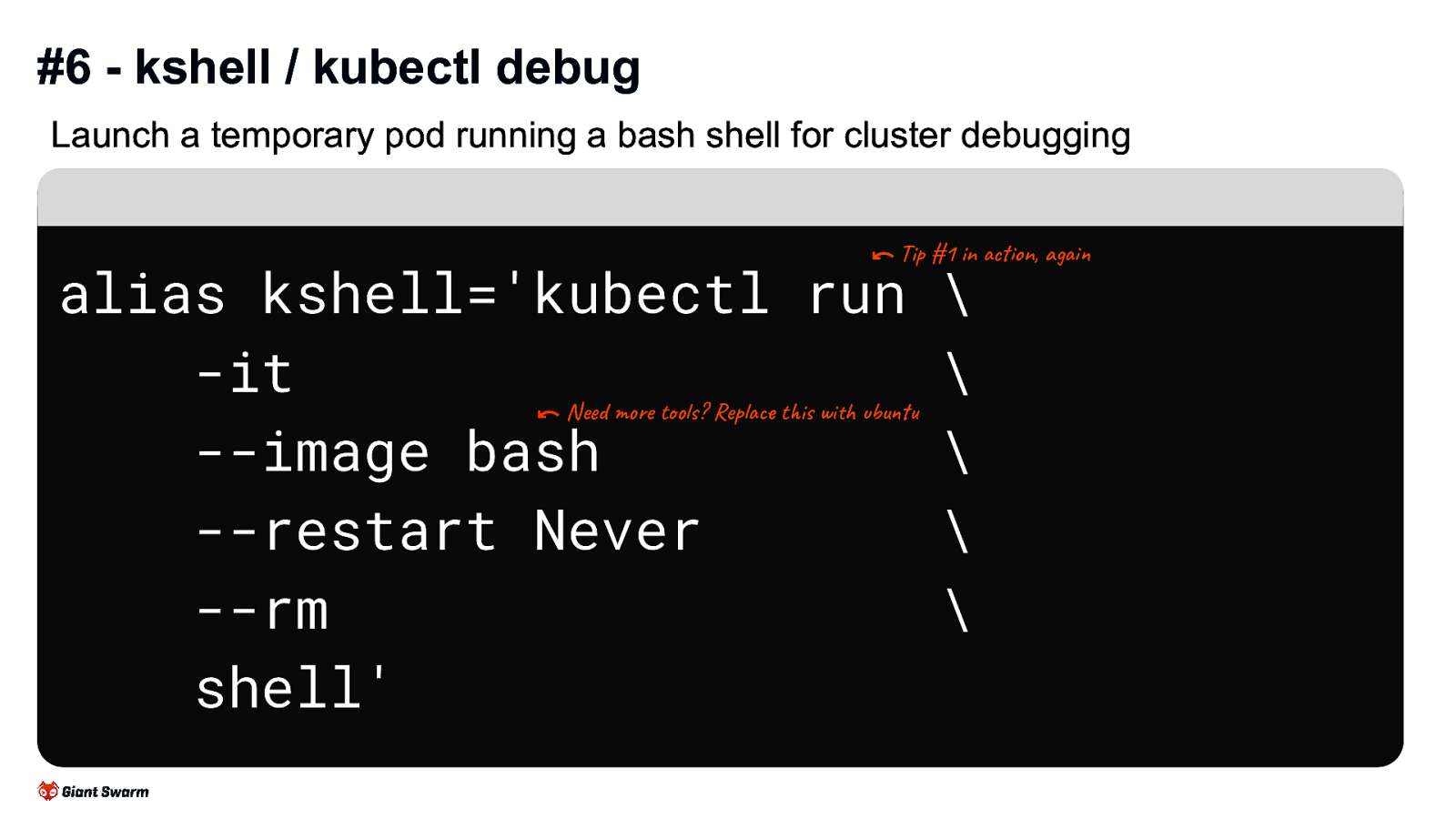

#6 - kshell / kubectl debug

Launch a temporary pod running a bash shell for cluster debugging

alias kshell='kubectl run -it --image bash --restart Never --rm shell'

Great for more general debugging of a cluster, especially with networking issues or similar.

Slide 18

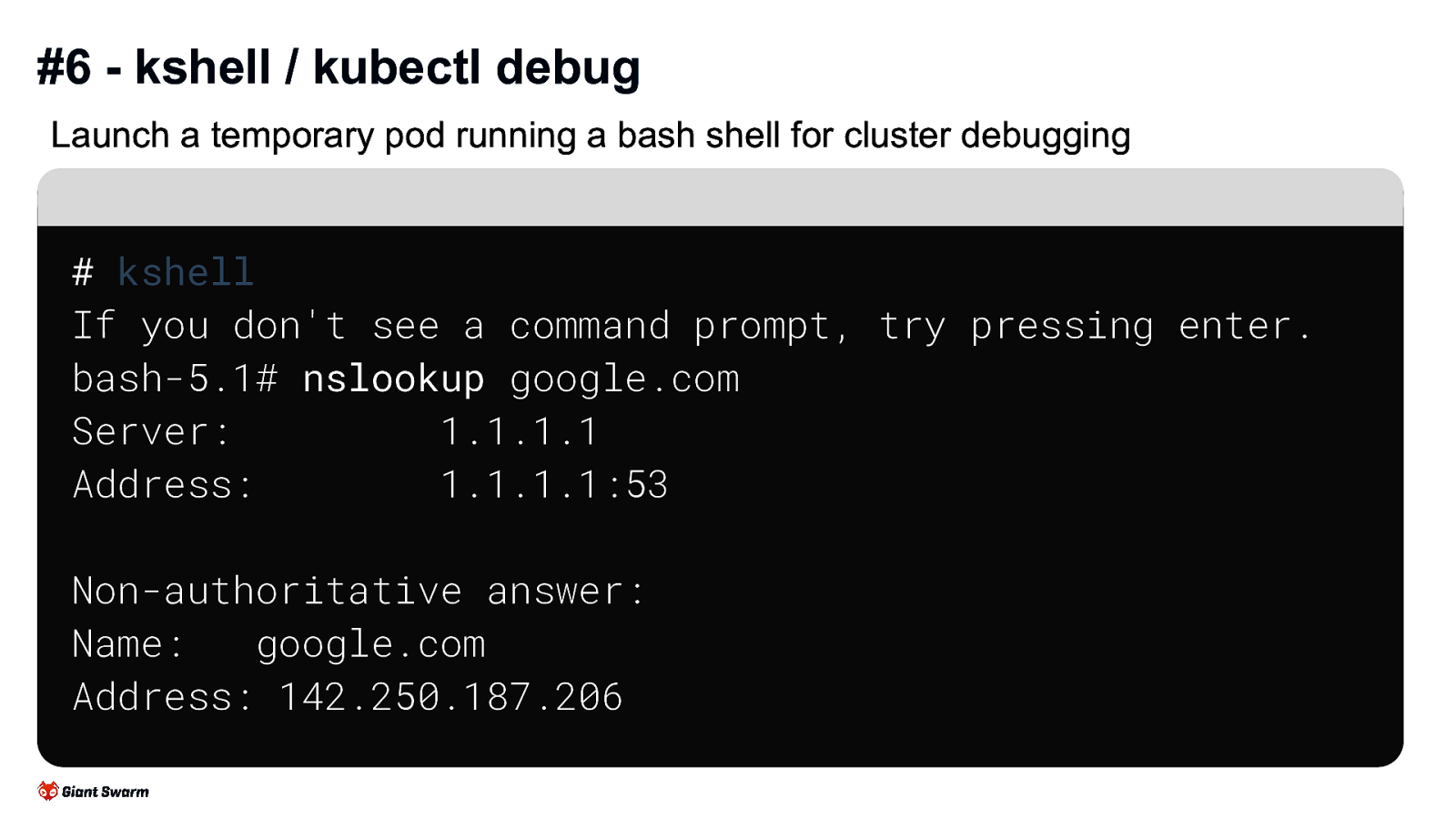

#6 - kshell / kubectl debug

Launch a temporary pod running a bash shell for cluster debugging

kshell

    If you don’t see a command prompt, try pressing enter.
    bash-5.1# nslookup google.com
    Server: 1.1.1.1
    Address: 1.1.1.1:53

    Non-authoritative answer:
    Name: google.com
    Address: 142.250.187.206


Slide 19

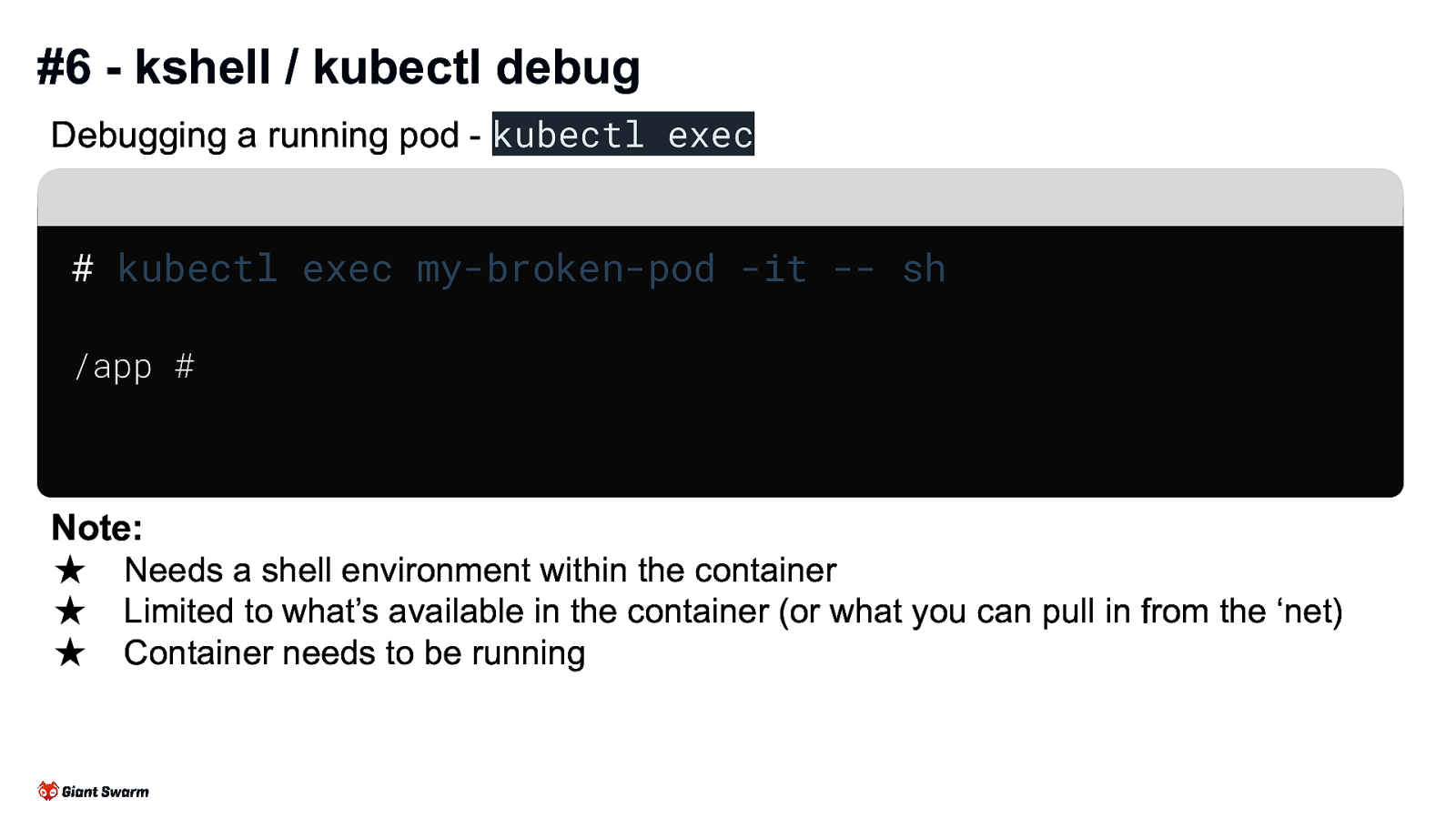

#6 - kshell / kubectl debug

Debugging a running pod - kubectl exec

kubectl exec my-broken-pod -it -- sh

/app #

Note:

- Needs a shell environment within the container

- Limited to what’s available in the container (or what you can pull in from the ‘net)

- Container needs to be running

kubectl exec is great for debugging misconfigured pods that aren’t crashing and have enough OS to exec into. But…

- If the pod is CrashLooping you’ll get kicked out of the session when it crashes.

- If the pod doesn’t have a shell you can exec into (e.g. a container that only has a Golang binary) you won’t be able to exec.

kubectl debug is great for pods that either don’t have any OS

Slide 20

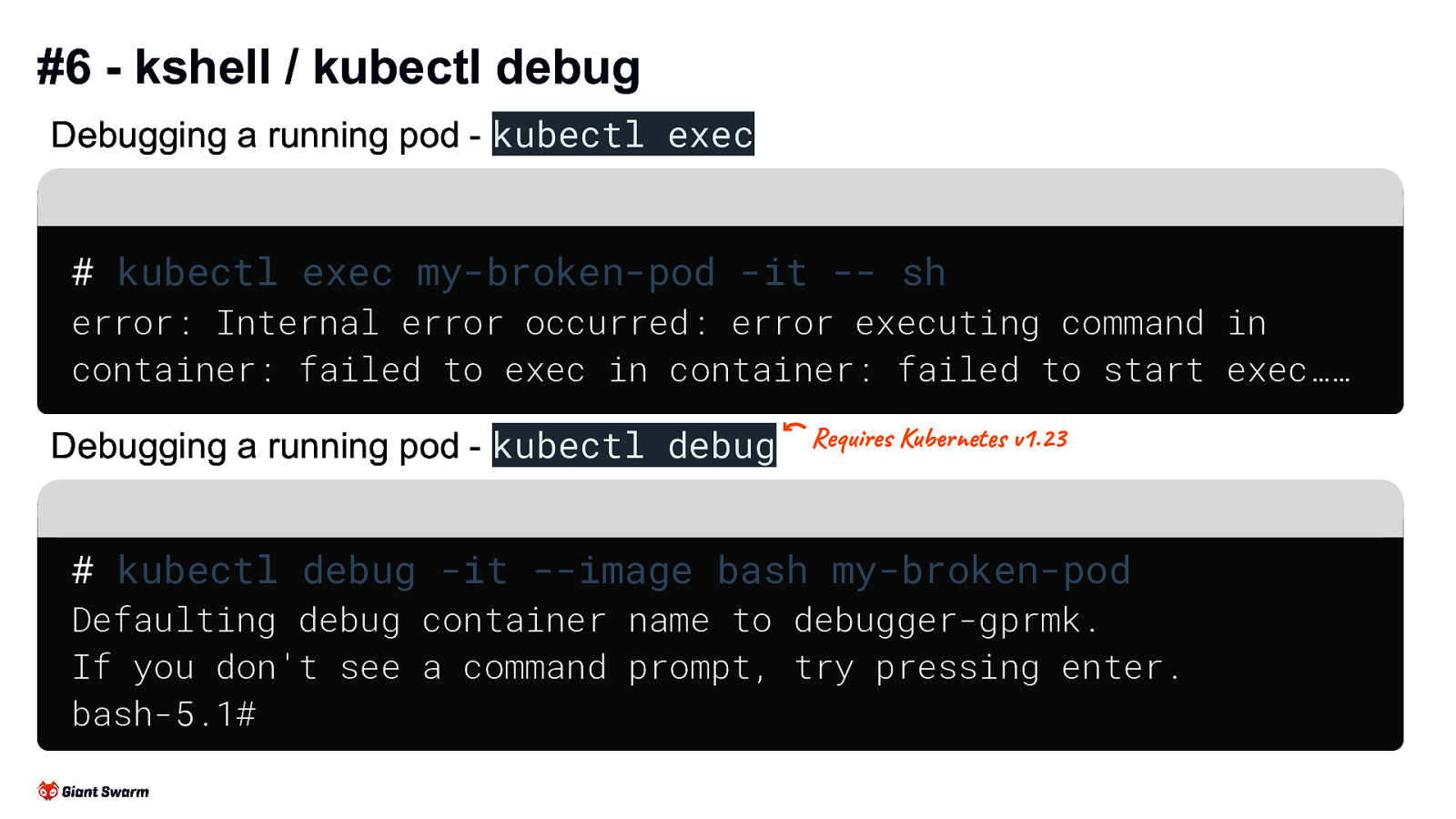

#6 - kshell / kubectl debug

Debugging a running pod - kubectl exec

kubectl exec my-broken-pod -it -- sh

error: Internal error occurred: error executing command in container: failed to exec in container: failed to start exec……

Debugging a running pod - kubectl debug (Requires Kubernetes v1.23)

kubectl debug -it --image bash my-broken-pod

    Defaulting debug container name to debugger-gprmk.
    If you don’t see a command prompt, try pressing enter.
    bash-5.1#


Slide 21

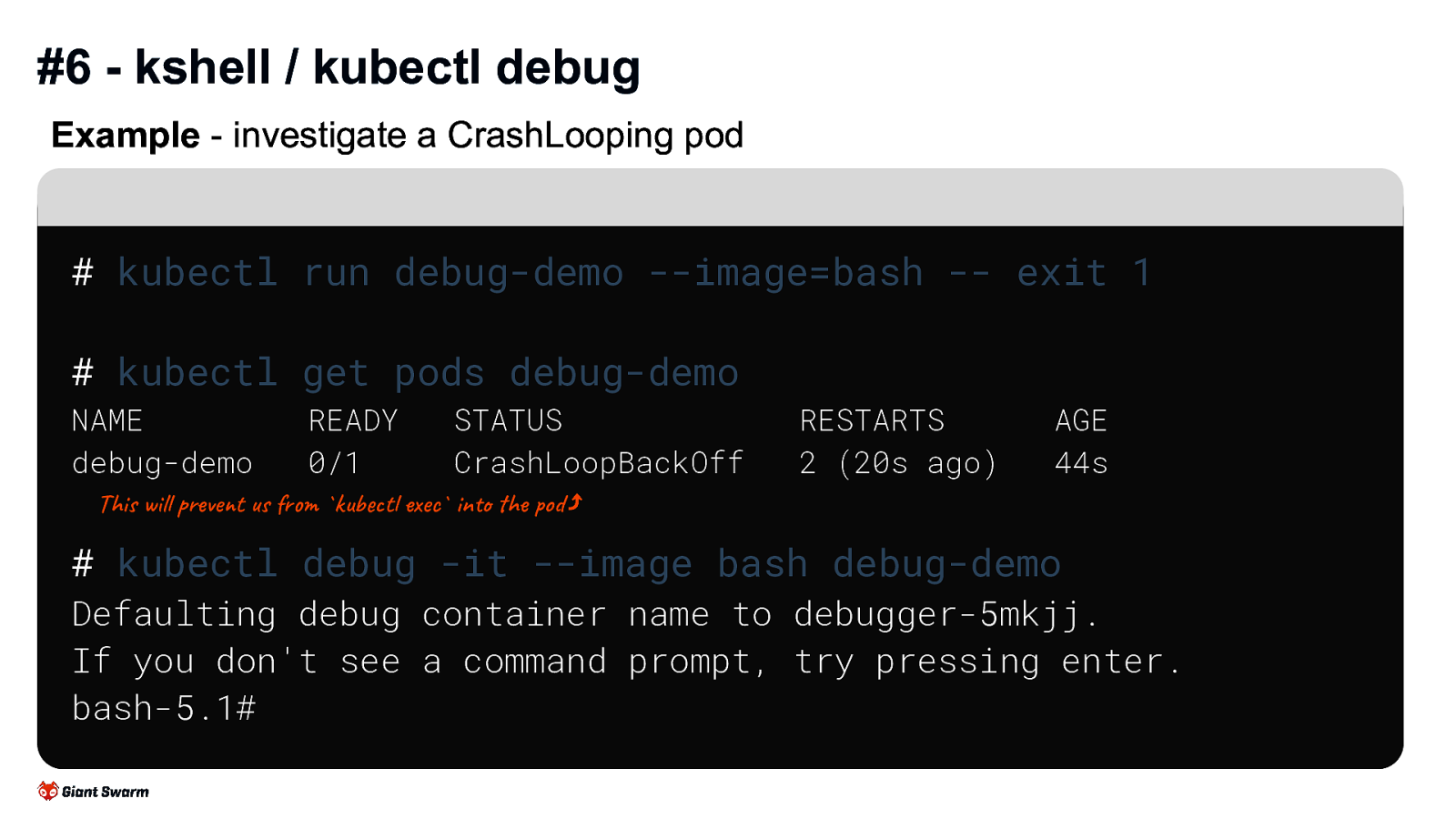

#6 - kshell / kubectl debug

Example - investigate a CrashLooping pod

kubectl run debug-demo --image=bash -- exit 1

kubectl get pods debug-demo

    NAME         READY   STATUS             RESTARTS      AGE
    debug-demo   0/1     CrashLoopBackOff   2 (20s ago)   44s

kubectl debug -it --image bash debug-demo

    Defaulting debug container name to debugger-5mkjj.
    If you don’t see a command prompt, try pressing enter.
    bash-5.1#

kubectl debug has a few different modes:

- kubectl debug - launches an “ephemeral container” within the pod you’re debugging

- kubectl debug --copy-to - creates a copy of the pod with some values replaced (e.g. the image used)

- kubectl debug node/my-node - launches a pod in the node’s host namespaces to debug the node

This has some limitations: it cannot access the whole filesystem of the failing container, only volumes that are shared.
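The three modes could be wrapped up roughly as follows. This is only a sketch: the function names and the "-debug" suffix for the copied pod are assumptions, and the copy variant shown here adds an extra debug container to the copy rather than replacing the image.

```shell
# Sketch of the three kubectl debug modes. Function names and the "-debug"
# suffix are illustrative assumptions; pod and node names are passed in.

# 1. Ephemeral container inside the existing pod
debug_pod() {
  kubectl debug -it --image=bash "$1"
}

# 2. Copy of the pod with an added debug container (original pod untouched)
debug_copy() {
  kubectl debug -it --image=bash --copy-to="$1-debug" "$1"
}

# 3. Pod running in the node's host namespaces
debug_node() {
  kubectl debug -it --image=bash "node/$1"
}
```

For example, debug_pod my-broken-pod mirrors the command shown on the slide, while debug_node my-node targets the node itself.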

Slide 22

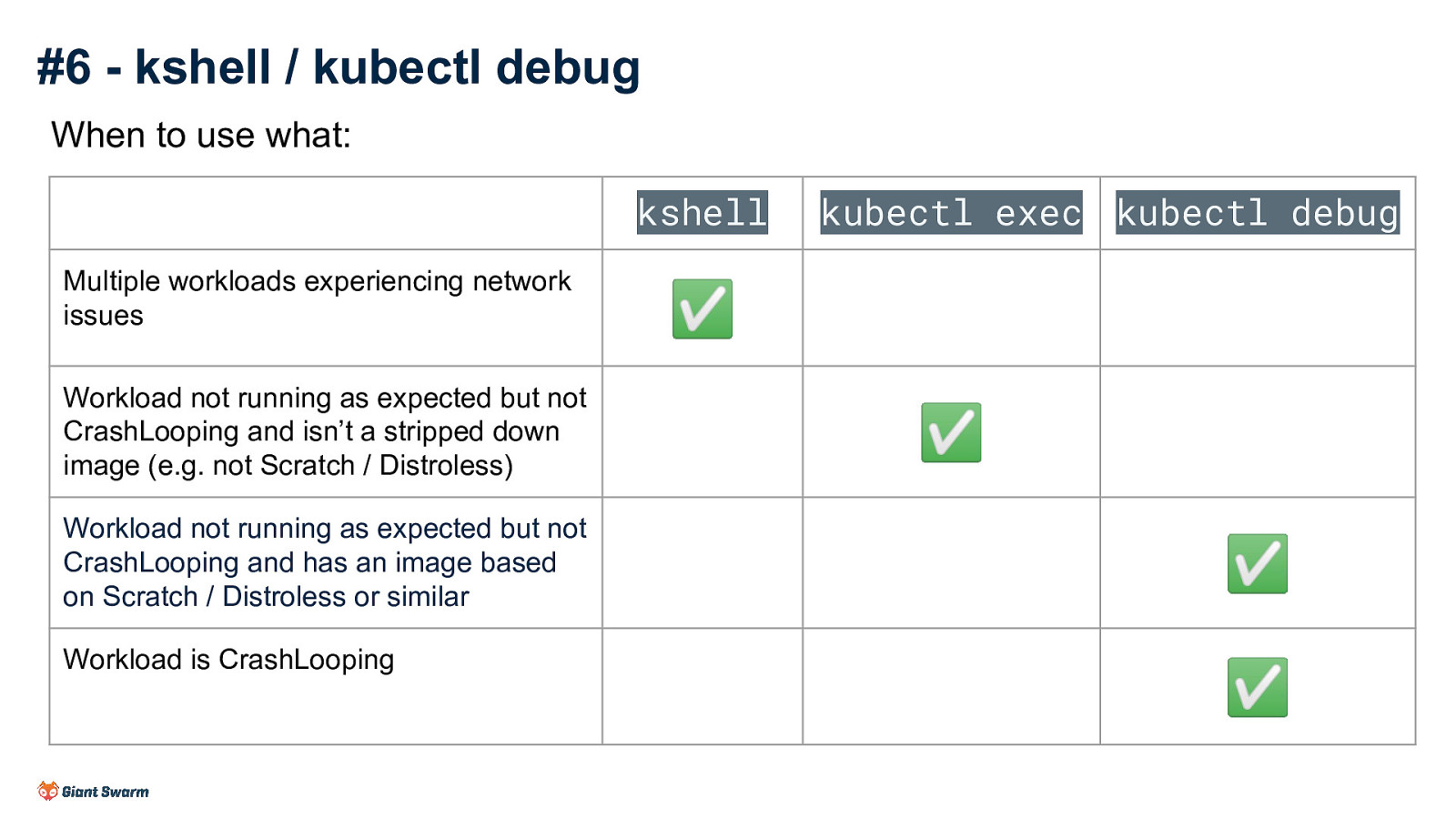

#6 - kshell / kubectl debug

When to use what:

| Scenario | kshell | kubectl exec | kubectl debug |
| --- | --- | --- | --- |
| Multiple workloads experiencing network issues | ✅ | | |
| Workload not running as expected but not CrashLooping and isn’t a stripped down image (e.g. not Scratch / Distroless) | | ✅ | |
| Workload not running as expected but not CrashLooping and has an image based on Scratch / Distroless or similar | | | ✅ |
| Workload is CrashLooping | | | ✅ |

Slide 23

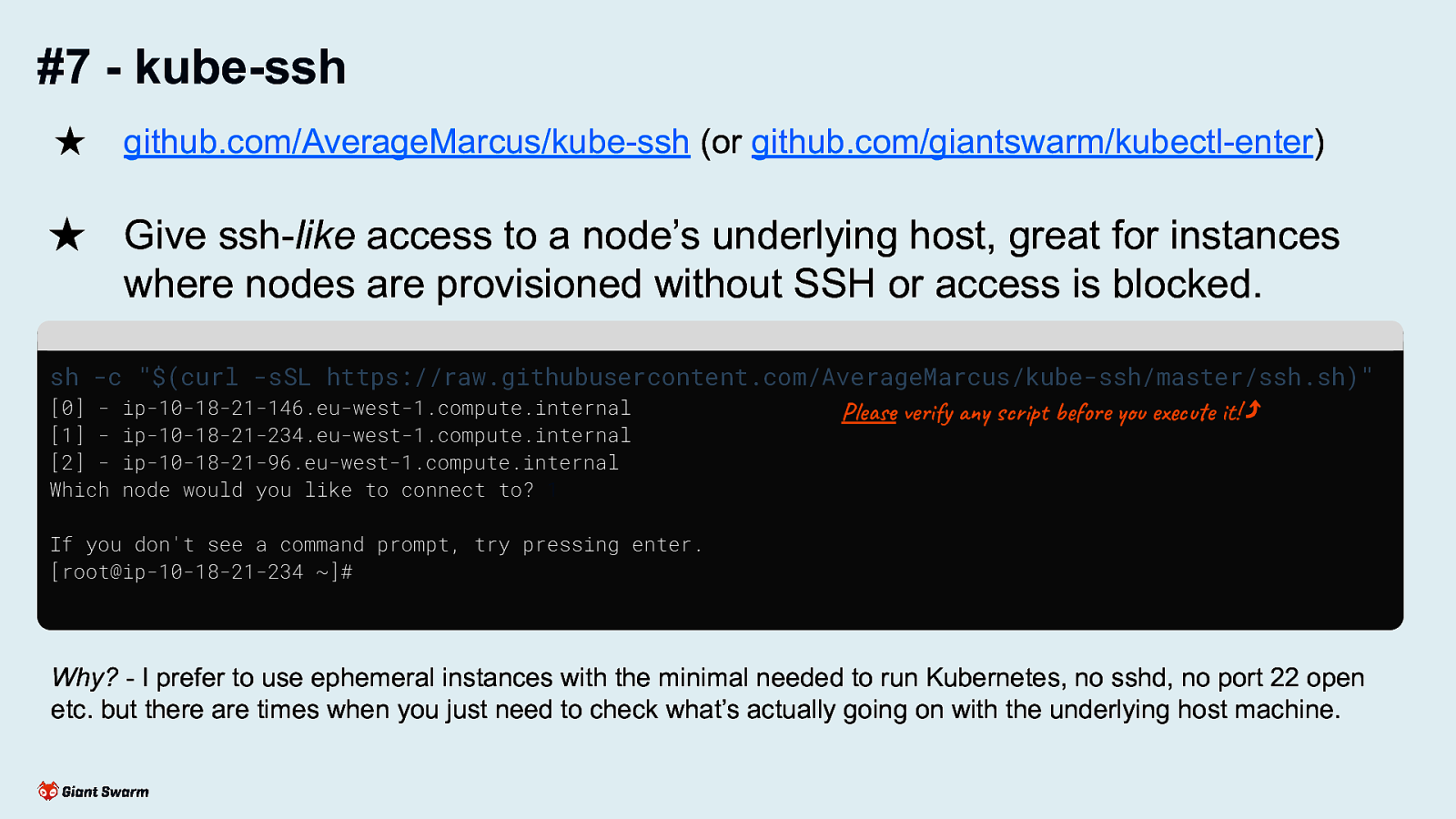

#7 - kube-ssh

Slide 24

#7 - kube-ssh

★ github.com/AverageMarcus/kube-ssh (or github.com/giantswarm/kubectl-enter)

★ Give ssh-like access to a node’s underlying host, great for instances where nodes are provisioned without SSH or access is blocked.

sh -c "$(curl -sSL https://raw.githubusercontent.com/AverageMarcus/kube-ssh/master/ssh.sh)"

    [0] ip-10-18-21-146.eu-west-1.compute.internal
    [1] ip-10-18-21-234.eu-west-1.compute.internal
    [2] ip-10-18-21-96.eu-west-1.compute.internal
    Which node would you like to connect to? 1
    If you don’t see a command prompt, try pressing enter.
    [root@ip-10-18-21-234 ~]#

Please verify any script before you execute it!

Why? I prefer to use ephemeral instances with the minimum needed to run Kubernetes: no sshd, no port 22 open, etc. That means a smaller potential attack surface and less chance of “hotfixes” or “tweaks” being forgotten about. But there are times when you just need to check what’s actually going on with the underlying host machine.

Always verify a shell script before you run it! Ideally, download it first and run that instead.

Slide 25

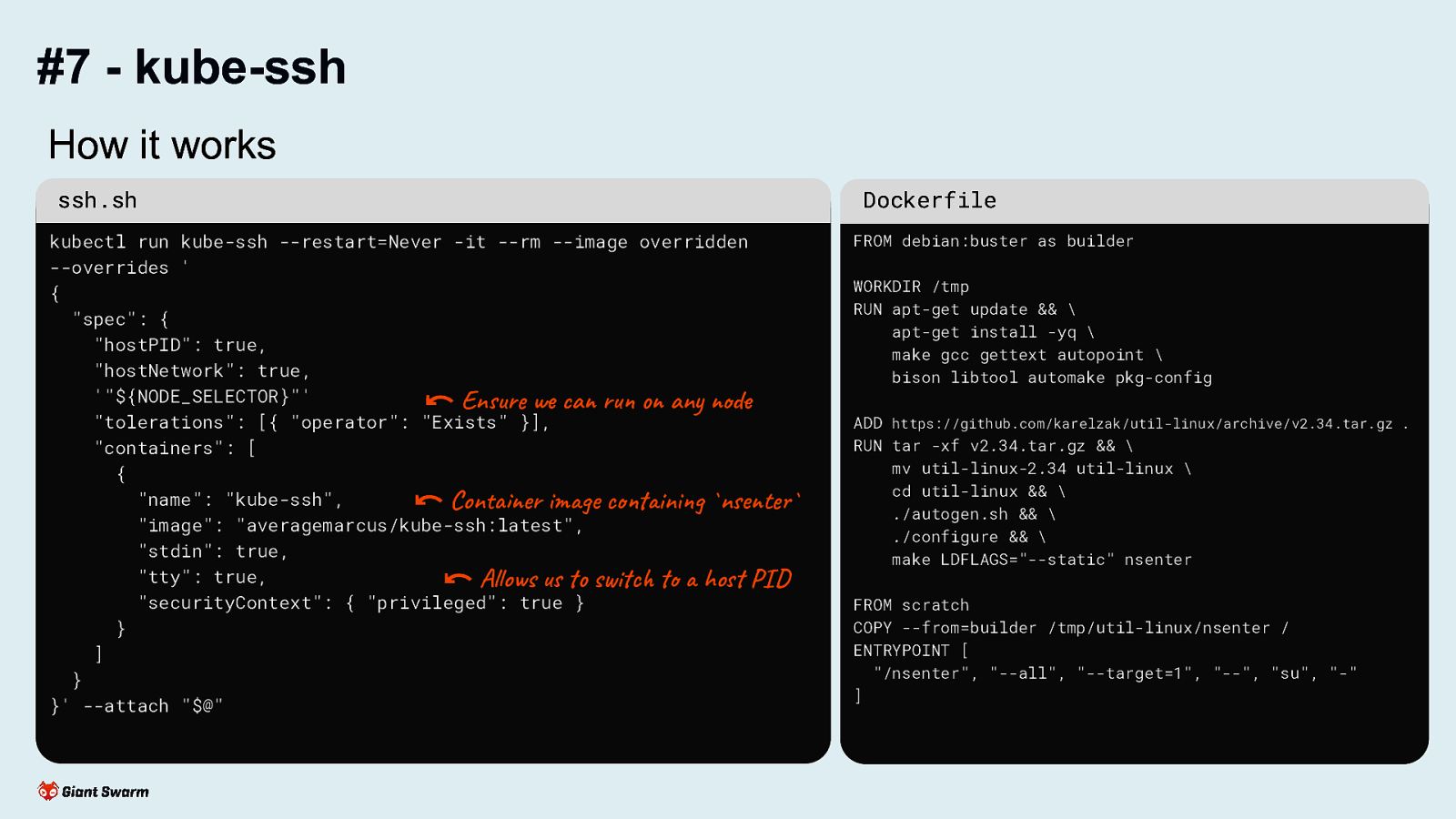

#7 - kube-ssh

How it works

ssh.sh

    kubectl run kube-ssh --restart=Never -it --rm --image overridden --overrides '
    {
      "spec": {
        "hostPID": true,
        "hostNetwork": true,
        '"${NODE_SELECTOR}"'
        "tolerations": [{
          "operator": "Exists"
        }],
        "containers": [
          {
            "name": "kube-ssh",
            "image": "averagemarcus/kube-ssh:latest",
            "stdin": true,
            "tty": true,
            "securityContext": {
              "privileged": true
            }
          }
        ]
      }
    }' --attach "$@"

- tolerations: ensure we can run on any node

- image: container image containing nsenter

- privileged securityContext: allows us to switch to a host PID

Dockerfile

    FROM debian:buster as builder
    WORKDIR /tmp
    RUN apt-get update && \
        apt-get install -yq \
        make gcc gettext autopoint \
        bison libtool automake pkg-config
    ADD https://github.com/karelzak/util-linux/archive/v2.34.tar.gz .
    RUN tar -xf v2.34.tar.gz && \
        mv util-linux-2.34 util-linux && \
        cd util-linux && \
        ./autogen.sh && \
        ./configure && \
        make LDFLAGS="--static" nsenter

    FROM scratch
    COPY --from=builder /tmp/util-linux/nsenter /
    ENTRYPOINT [ "/nsenter", "--all", "--target=1", "--", "su", "-" ]

ssh.sh: a snippet of the script that launches a new pod on the desired node (not shown here is the node selection logic).

Dockerfile: the image that is used for the pod. It just builds nsenter and uses it to switch to the host scope.

Slide 26

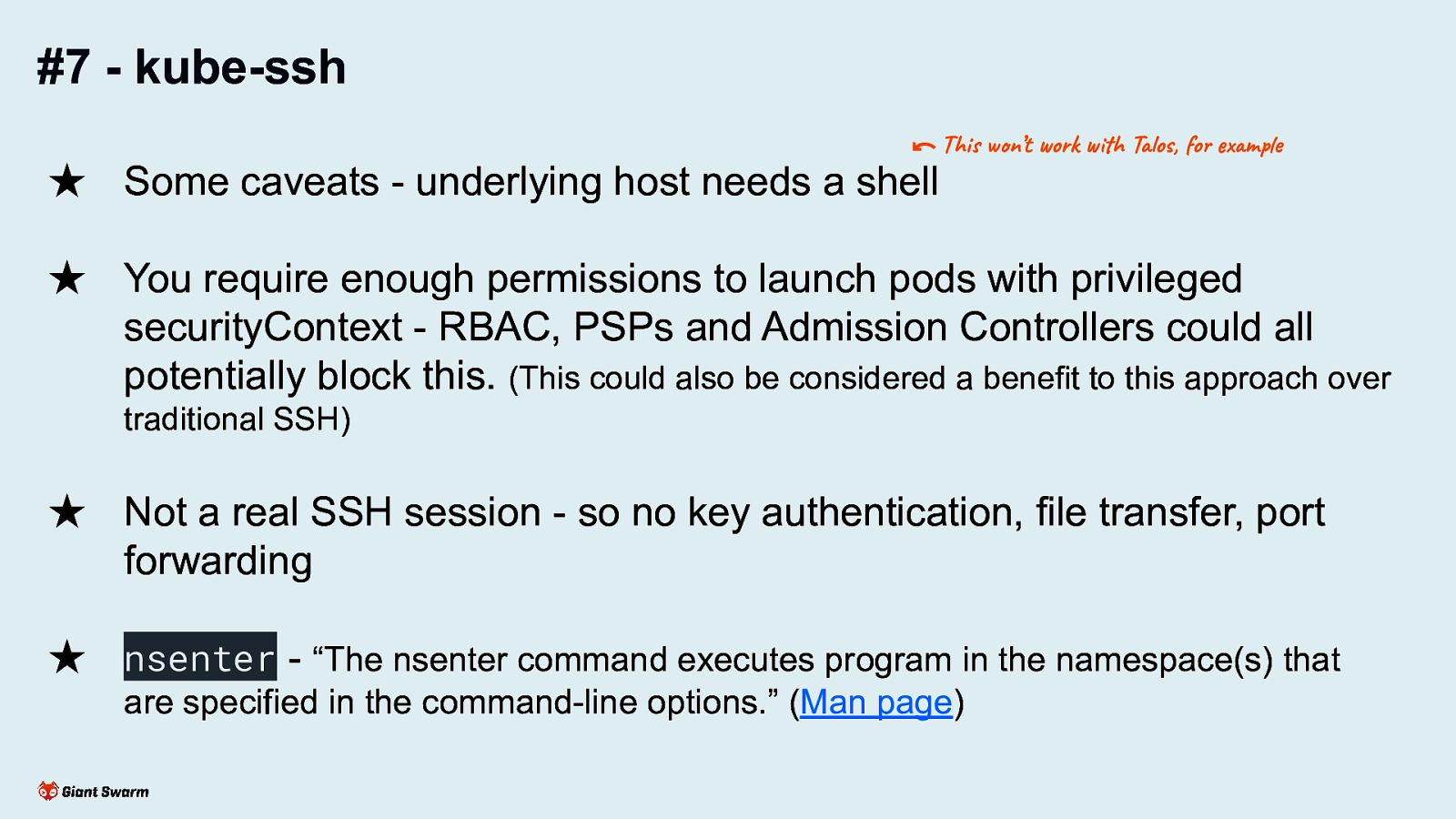

#7 - kube-ssh

★ Some caveats - underlying host needs a shell (this won’t work with Talos, for example)

★ You require enough permissions to launch pods with privileged securityContext - RBAC, PSPs and Admission Controllers could all potentially block this. (This could also be considered a benefit of this approach over traditional SSH)

★ Not a real SSH session - so no key authentication, file transfer, port forwarding

★ nsenter - “The nsenter command executes program in the namespace(s) that are specified in the command-line options.” (Man page)

Slide 27

Summary

My 10 tips for working with Kubernetes

✅ #1 → #5 Anyone can start using these today

✅ #6 → #7 Good to know a little old-skool ops first

#8 → #10 Good to have some programming knowledge

Slide 28

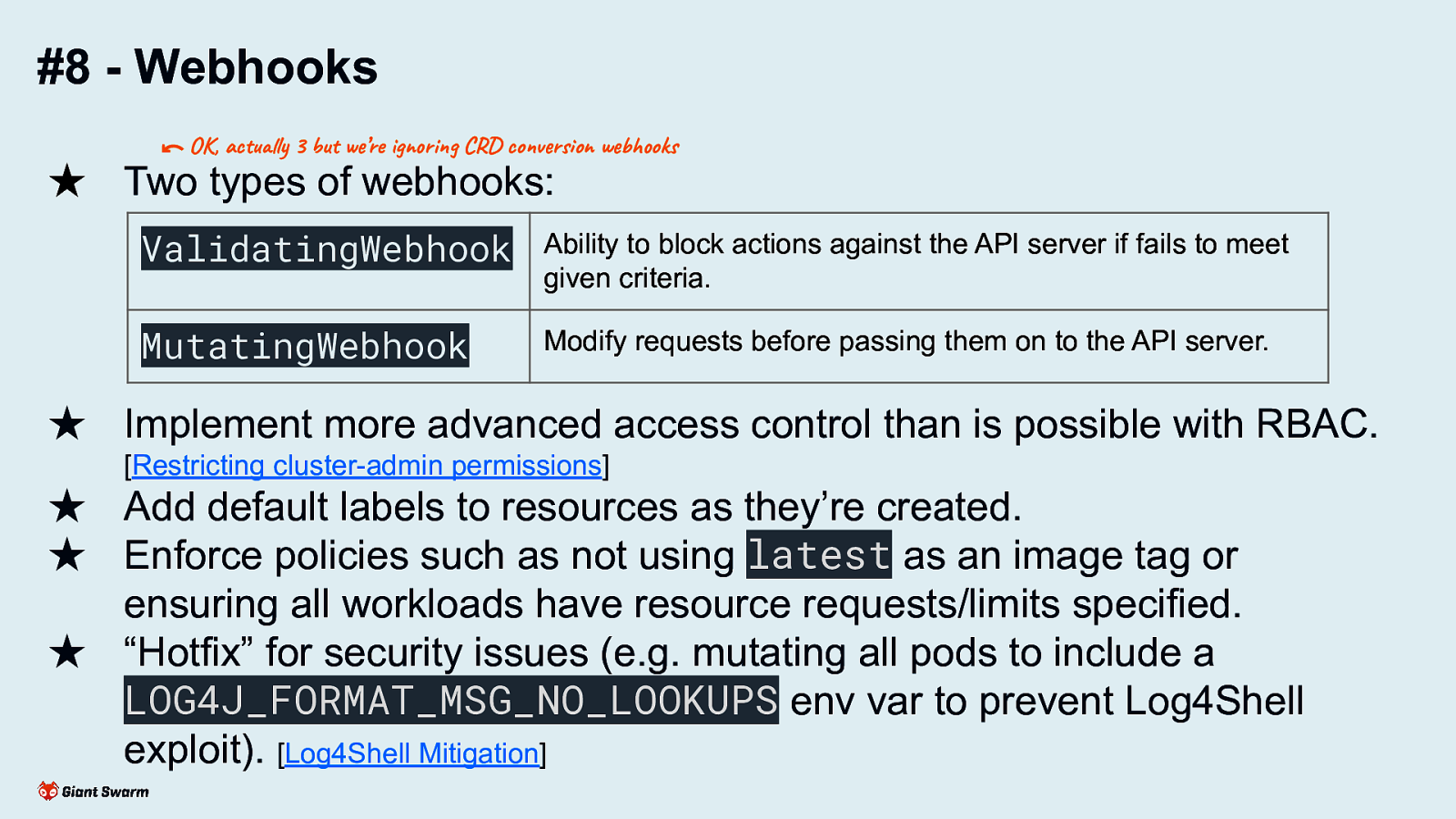

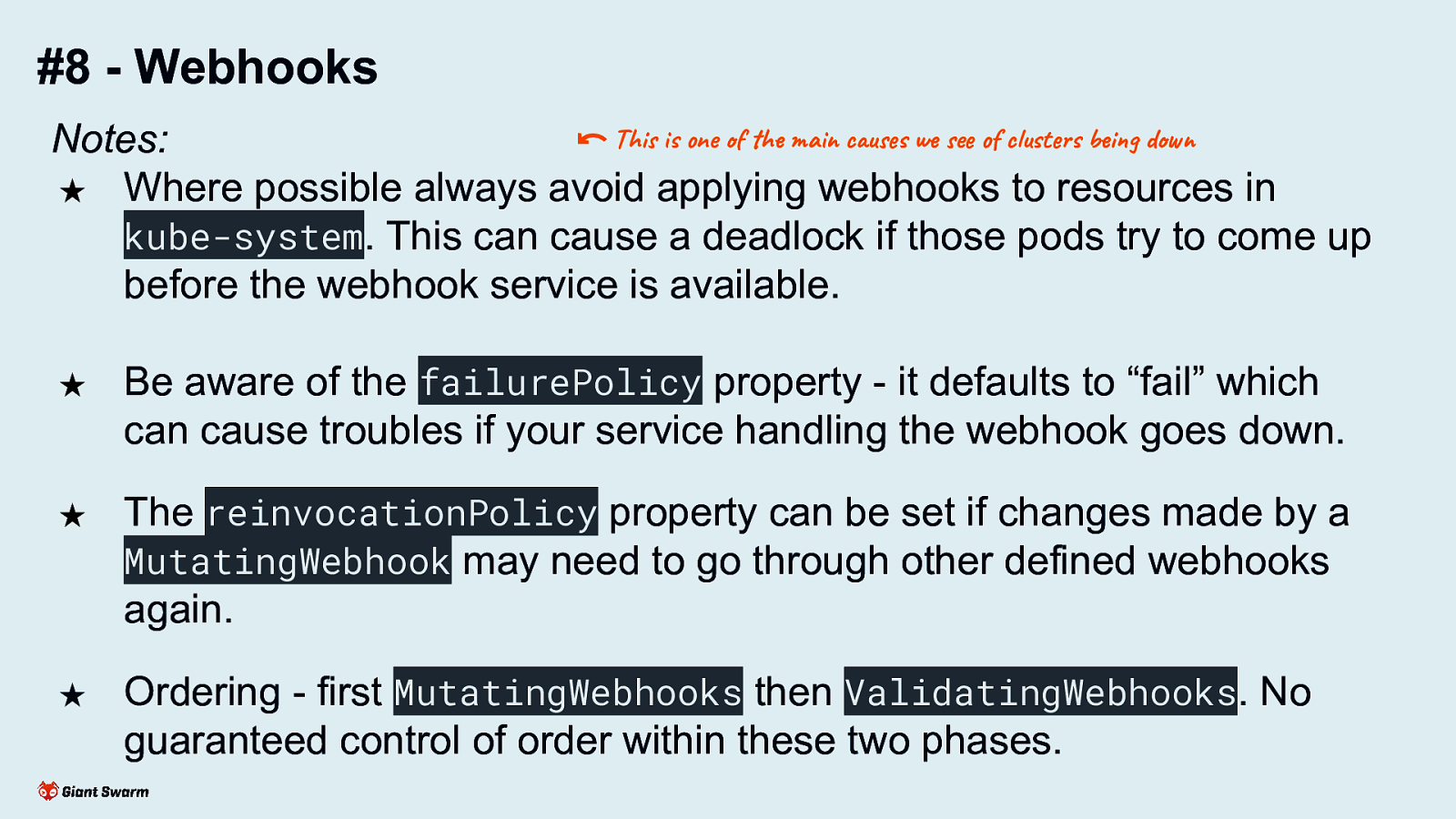

#8 - Webhooks

Slide 29

#8 - Webhooks

★ Two types of webhooks (OK, actually 3 but we’re ignoring CRD conversion webhooks):

○ ValidatingWebhook - Ability to block actions against the API server if they fail to meet given criteria.

○ MutatingWebhook - Modify requests before passing them on to the API server.

★ Implement more advanced access control than is possible with RBAC. [Restricting cluster-admin permissions]

★ Add default labels to resources as they’re created.

★ Enforce policies such as not using latest as an image tag or ensuring all workloads have resource requests/limits specified.

★ “Hotfix” for security issues (e.g. mutating all pods to include a LOG4J_FORMAT_MSG_NO_LOOKUPS env var to prevent Log4Shell exploit). [Log4Shell Mitigation]

Allows for subtractive access control (taking away a user’s ability to perform a certain action against a certain resource), something not possible with RBAC.

See the blog post about how we avoided a nasty bug in our CLI tool with a ValidatingWebhook: https://www.giantswarm.io/blog/restricting-cluster-admin-permissions

Slide 30

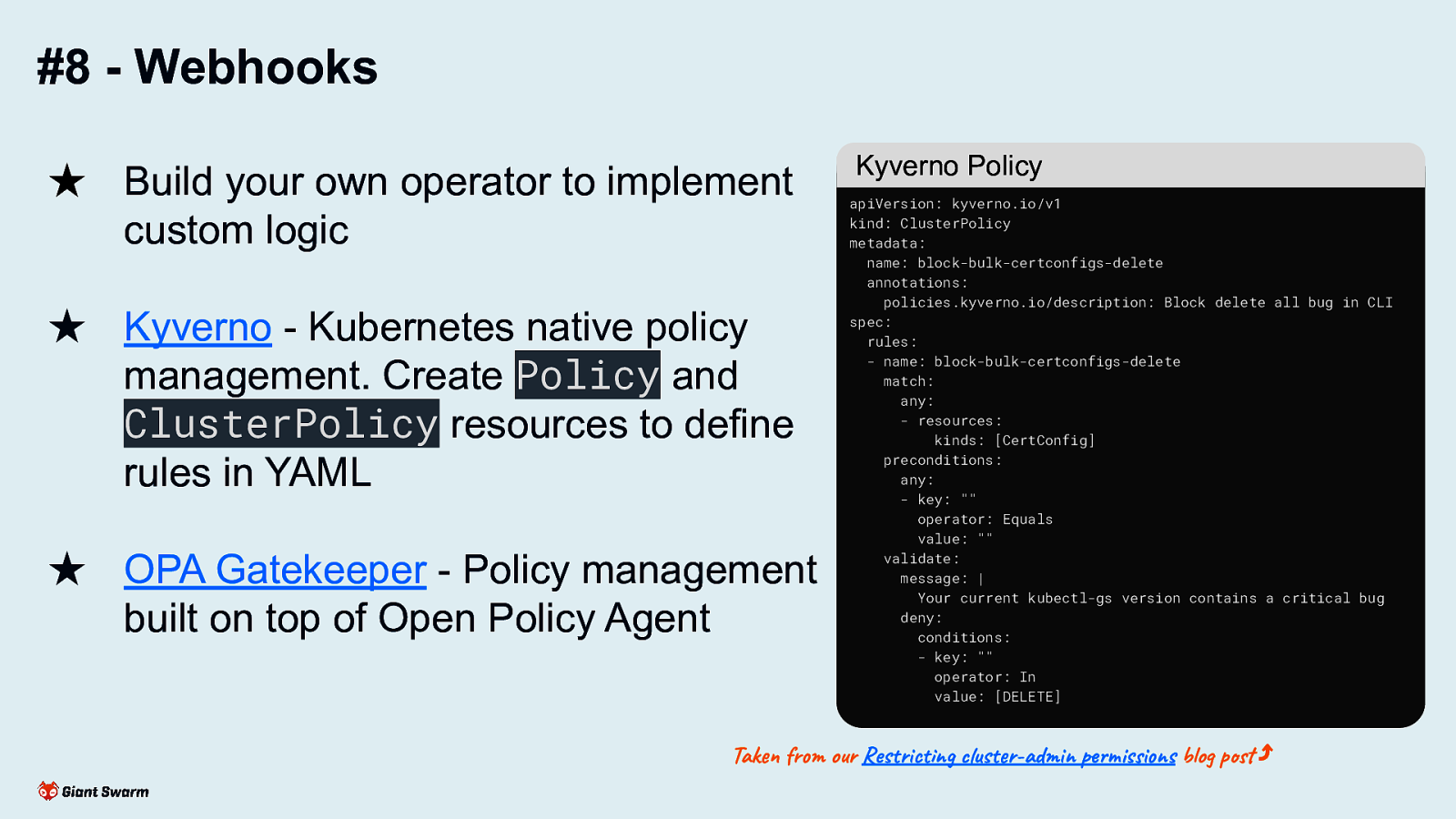

#8 - Webhooks

★ Build your own operator to implement custom logic

★ Kyverno - Kubernetes native policy management. Create Policy and ClusterPolicy resources to define rules in YAML

★ OPA Gatekeeper - Policy management built on top of Open Policy Agent

Kyverno Policy (taken from our Restricting cluster-admin permissions blog post):

    apiVersion: kyverno.io/v1
    kind: ClusterPolicy
    metadata:
      name: block-bulk-certconfigs-delete
      annotations:
        policies.kyverno.io/description: Block delete all bug in CLI
    spec:
      rules:
      - name: block-bulk-certconfigs-delete
        match:
          any:
          - resources:
              kinds: [CertConfig]
        preconditions:
          any:
          - key: ""
            operator: Equals
            value: ""
        validate:
          message: |
            Your current kubectl-gs version contains a critical bug
          deny:
            conditions:
            - key: ""
              operator: In
              value: [DELETE]

These are the three most common approaches to leveraging webhooks.

Slide 31

#8 - Webhooks

Notes:

★ Where possible always avoid applying webhooks to resources in kube-system. This can cause a deadlock if those pods try to come up before the webhook service is available. (This is one of the main causes we see of clusters being down.)

★ Be aware of the failurePolicy property - it defaults to “Fail”, which can cause trouble if the service handling the webhook goes down.

★ The reinvocationPolicy property can be set if changes made by a MutatingWebhook may need to go through other defined webhooks again.

★ Ordering - first MutatingWebhooks then ValidatingWebhooks. No guaranteed control of order within these two phases.

Webhooks can break a cluster. Make sure your service is resilient and that your webhooks don’t block critical workloads. Webhooks can be backed by either a service within the cluster or a URL outside of the cluster.
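One way to keep an eye on the failurePolicy point is to list what is currently configured in a cluster. The helper below is a hedged sketch: the function name is made up, and it simply prints each webhook configuration name followed by the failurePolicy values of its webhooks.

```shell
# Sketch: print each admission webhook configuration with the failurePolicy
# of its webhooks, so that "Fail" entries can be reviewed.
# The function name is an illustrative assumption.
list_webhook_failure_policies() {
  kubectl get validatingwebhookconfigurations,mutatingwebhookconfigurations \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.webhooks[*].failurePolicy}{"\n"}{end}'
}
```

Any entry reporting Fail is worth checking against the notes above, in particular whether it can match resources in kube-system.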

Slide 32

#9 - Kubernetes API

Slide 33

#9 - Kubernetes API

All Kubernetes operations are done via the API - kubectl uses it, in-cluster controllers use it, the scheduler uses it and you can use it too! ✨

The API can also be extended by either:

● the creation of Custom Resource Definitions (CRDs)

● implementing an Aggregation Layer (such as what metrics-server implements). We’re not going to cover this today.

Slide 34

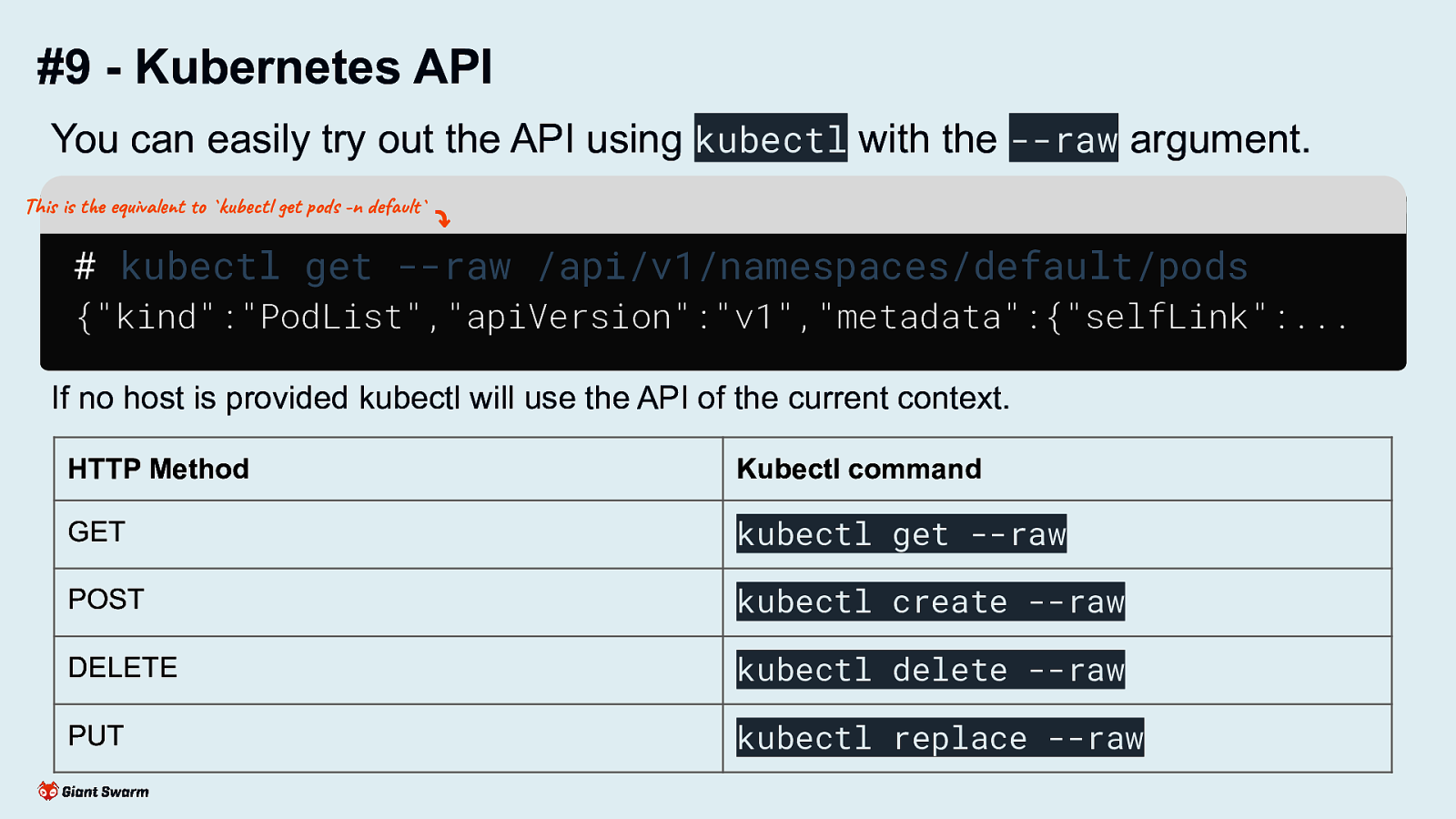

#9 - Kubernetes API

You can easily try out the API using kubectl with the --raw argument. The following is the equivalent of kubectl get pods -n default:

kubectl get --raw /api/v1/namespaces/default/pods

    {"kind":"PodList","apiVersion":"v1","metadata":{"selfLink":…

If no host is provided kubectl will use the API of the current context.

| HTTP Method | Kubectl command |
| --- | --- |
| GET | kubectl get --raw |
| POST | kubectl create --raw |
| DELETE | kubectl delete --raw |
| PUT | kubectl replace --raw |

To target another cluster not set as your current kubeconfig context you can specify the full URL of the endpoint. Be aware that not all kubectl commands map to a single API call. Lots do several API calls under the hood.

Slide 35

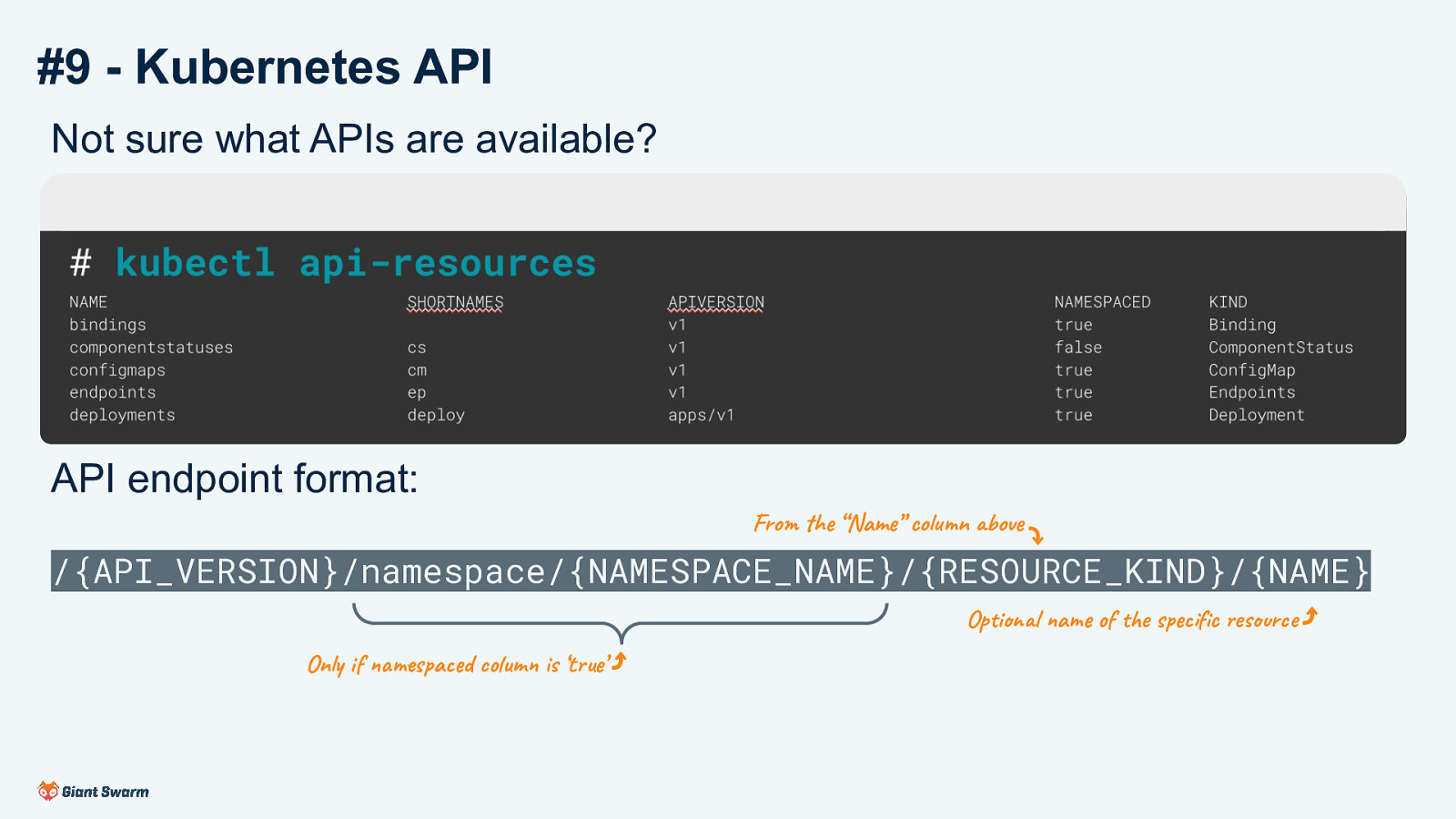

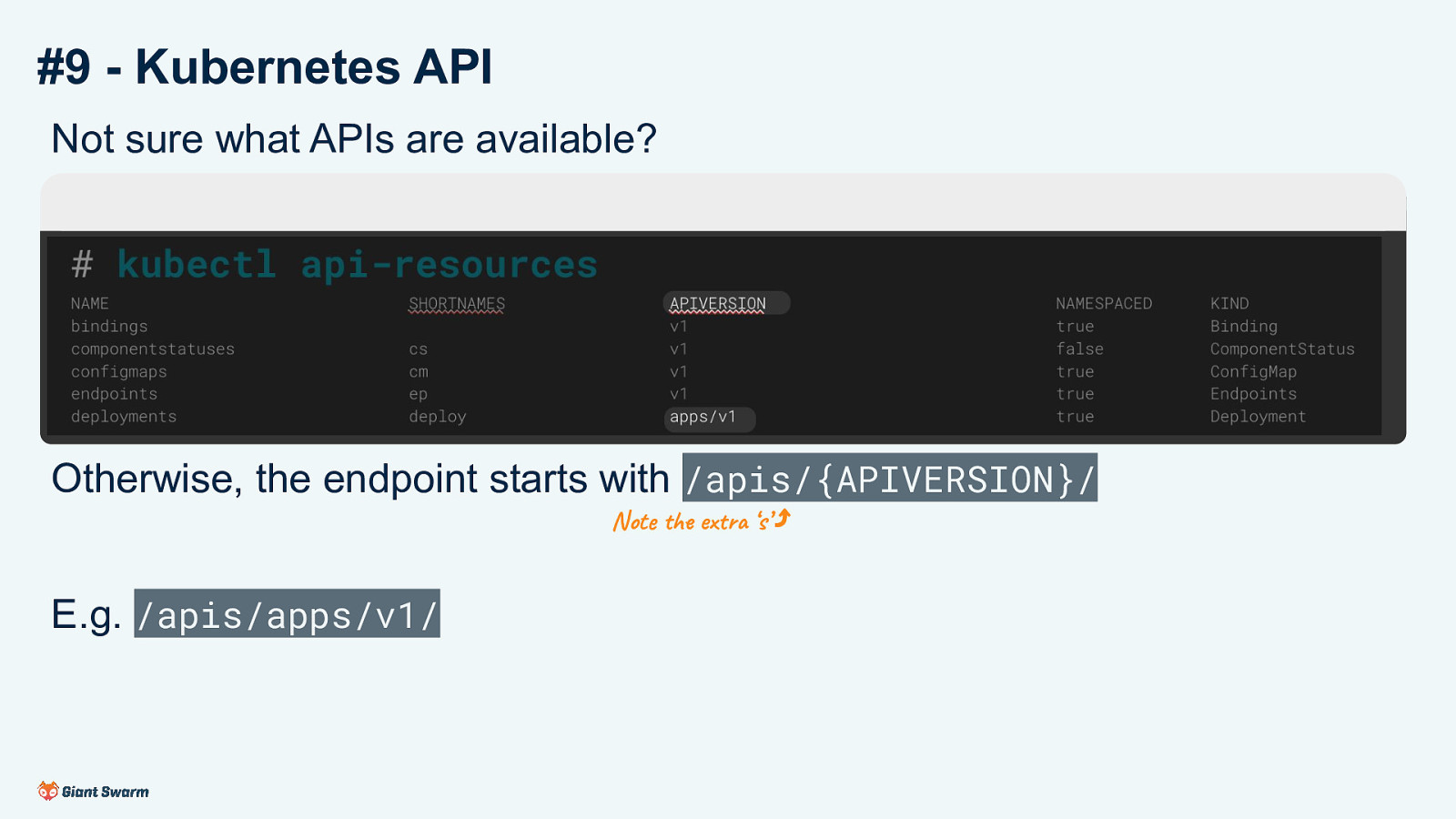

#9 - Kubernetes API

Not sure what APIs are available?

    # kubectl api-resources

| NAME | SHORTNAMES | APIVERSION | NAMESPACED | KIND |
| --- | --- | --- | --- | --- |
| bindings | | v1 | true | Binding |
| componentstatuses | cs | v1 | false | ComponentStatus |
| configmaps | cm | v1 | true | ConfigMap |
| endpoints | ep | v1 | true | Endpoints |
| deployments | deploy | apps/v1 | true | Deployment |

API endpoint format:

/{API_VERSION}/namespaces/{NAMESPACE_NAME}/{RESOURCE_KIND}/{NAME}

- /namespaces/{NAMESPACE_NAME} - only if the NAMESPACED column is ‘true’

- {RESOURCE_KIND} - from the NAME column above

- {NAME} - optional name of the specific resource

Slide 36

#9 - Kubernetes API

If APIVERSION is just v1 the endpoint starts with /api/v1/ (this is the “core” API).

E.g. /api/v1/componentstatuses

The “core” API is accessible on /api/v1

Slide 37

#9 - Kubernetes API

Otherwise, the endpoint starts with /apis/{APIVERSION}/ (note the extra ‘s’).

E.g. /apis/apps/v1/

APIs added to Kubernetes in later versions are available on the /apis endpoint. This includes built-in ones like Deployments as well as Custom Resources.

Slide 38

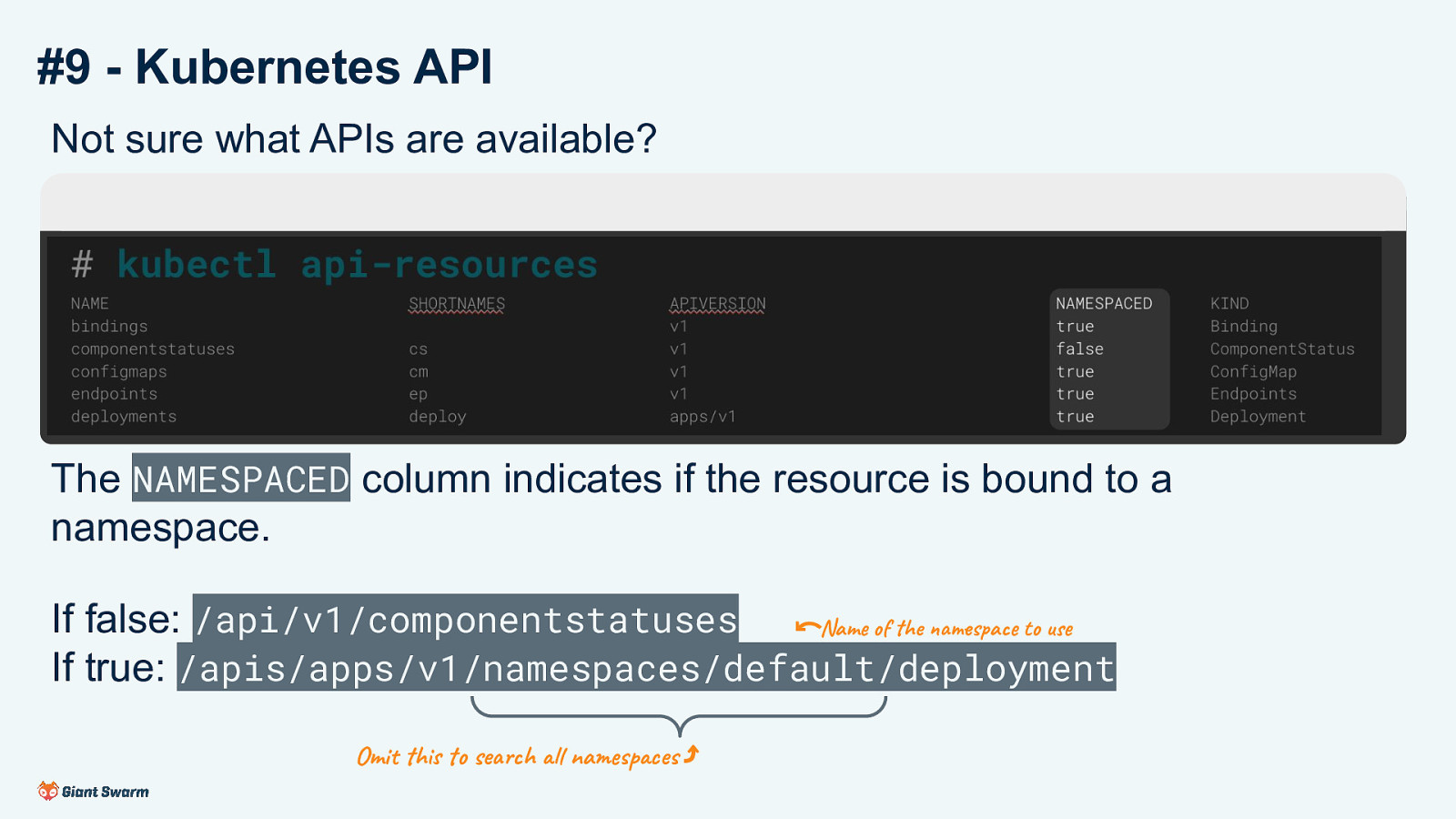

#9 - Kubernetes API

The NAMESPACED column indicates if the resource is bound to a namespace.

If false: /api/v1/componentstatuses

If true: /apis/apps/v1/namespaces/default/deployments

- default is the name of the namespace to use

- Omit /namespaces/default to search all namespaces
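The endpoint rules from the last few slides can be pulled together in a small helper. This is a sketch only: k8s_api_path is a hypothetical function, not part of kubectl, and it takes the APIVERSION and NAME values from kubectl api-resources plus an optional namespace and resource name.

```shell
# Sketch: build an API path from `kubectl api-resources` columns.
# k8s_api_path is a hypothetical helper, not part of kubectl.
# Usage: k8s_api_path APIVERSION RESOURCE [NAMESPACE] [NAME]
k8s_api_path() {
  local api_version resource namespace name path
  api_version="$1"
  resource="$2"
  namespace="${3:-}"
  name="${4:-}"

  # The "core" API lives under /api, everything else under /apis
  if [ "$api_version" = "v1" ]; then
    path="/api/v1"
  else
    path="/apis/$api_version"
  fi

  # Add the namespaces segment only when a namespace is given
  if [ -n "$namespace" ]; then
    path="$path/namespaces/$namespace"
  fi

  path="$path/$resource"

  # Optional name of one specific resource
  if [ -n "$name" ]; then
    path="$path/$name"
  fi

  echo "$path"
}
```

The result can be passed straight to the raw API, for example kubectl get --raw "$(k8s_api_path apps/v1 deployments default)".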

Slide 39

#9 - Kubernetes API

Resources:

- kubernetes/client-go - the official Golang module for interacting with the Kubernetes API

- Kubernetes Provider for Terraform (actually uses the above Go module under the hood)

- kubernetes-client org on GitHub has many official clients in different languages

Where is this useful?

★ Building our own CLI / desktop tooling (e.g. k9s, Lens).

★ Cluster automation - resources managed by CI, CronJobs, etc.

★ Building our own operators to extend Kubernetes.

Make use of one of the many client libraries available rather than interacting with the REST endpoint directly. Plenty more official clients are available at https://github.com/kubernetes-client

Slide 40

#10 - CRDs & Operators

Slide 41

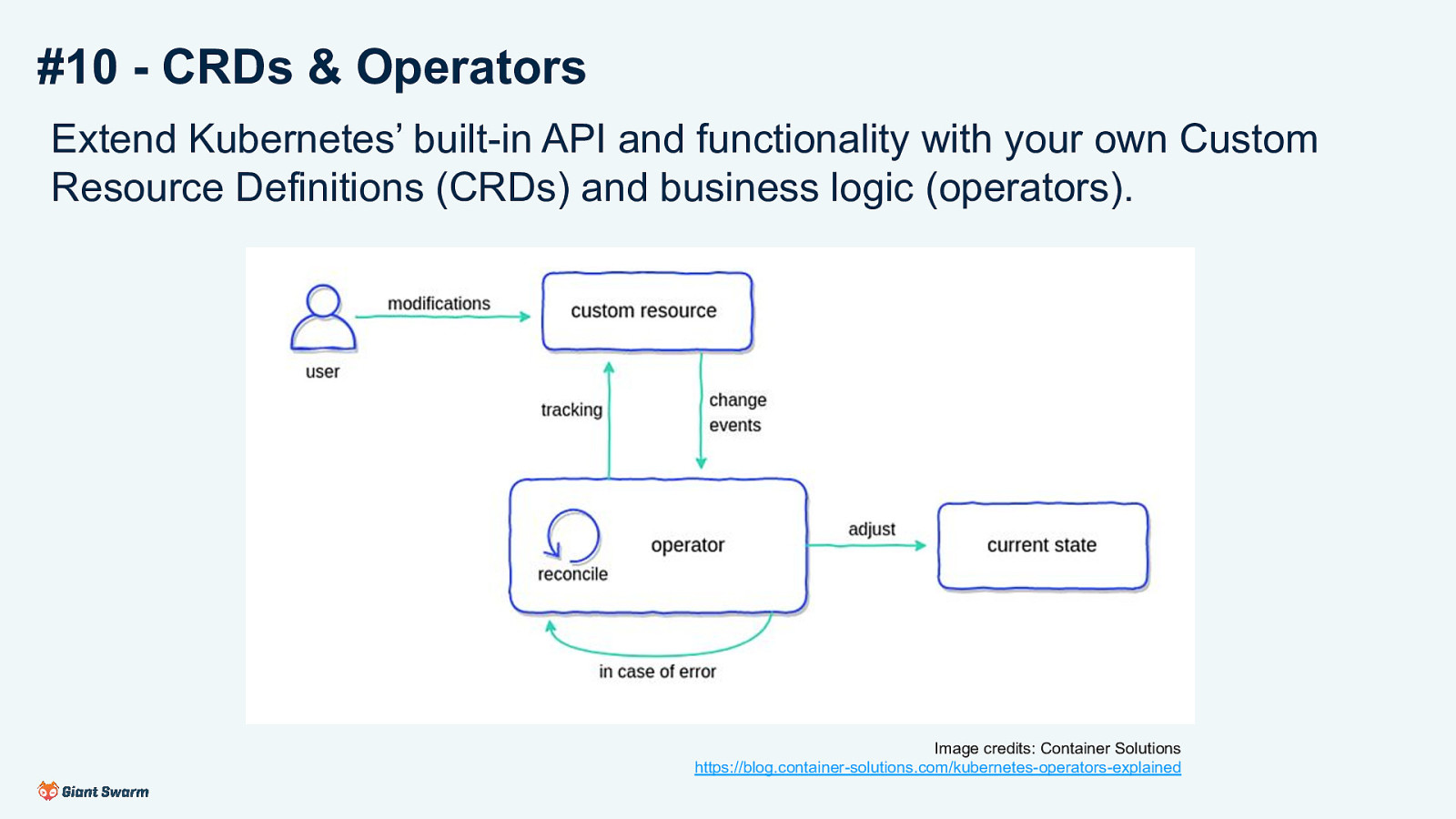

#10 - CRDs & Operators

Extend Kubernetes’ built-in API and functionality with your own Custom Resource Definitions (CRDs) and business logic (operators).

Image credits: Container Solutions https://blog.container-solutions.com/kubernetes-operators-explained

We’ve already seen hints of this in this talk. Kyverno implements CRDs and has an operator that manages them. We mentioned extending the Kubernetes API with custom resources, giving us programmatic access for our operators.

Slide 42

#10 - CRDs & Operators

Frameworks

● Metacontroller

References

● https://kubernetes.io/docs/concepts/extend-kubernetes/operator/

● https://blog.container-solutions.com/kubernetes-operators-explained

● https://operatorhub.io/ - Directory of existing operators

Videos

This topic is too large to cover within this talk, and there are already plenty of better resources available. Kubebuilder tends to be the most popular framework and is used by all of the cluster-api projects.

Slide 43

Summary

My 10 tips for working with Kubernetes

✅ #1 → #5 Anyone can start using these today

✅ #6 → #7 Good to know a little old-skool ops first

✅ #8 → #10 Good to have some programming knowledge

Slide 44

Recap

#1 - Love your terminal: Shell aliases and helpers

#2 - Learn to love kubectl: Alias k, kubectl explain

#3 - Multiple kubeconfigs: Kubeswitch

#4 - k9s: Interactively work with clusters

#5 - Kubectl plugins: Krew. Build your own with bash. Kubectl- prefixed name

#6 - kshell / kubectl debug: Pod debugging

#7 - kube-ssh: Node debugging

#8 - Webhooks: Validating and mutating requests to the Kubernetes API

#9 - Kubernetes API: Working directly with the API to build our own logic

#10 - CRDs & Operators: Extending Kubernetes with our own resources and logic

Slide 45

Thank You 🧡 All resources linked in this presentation are also available at that link.